Abstract

This article explores how visual technology is integrated and applied in intelligent product design. Case studies of intelligent product design show that breakthrough products often emerge when advances in cutting-edge computing fields such as artificial intelligence, deep learning, and computer vision are combined with practical needs and user experience problems in daily life. By analyzing and supplementing the classic psychological model of product design, the author proposes the IDUPM model, which takes visual technology as one entry point to better guide the design of intelligent products. The author argues that designers should strengthen their ability to understand and transform interdisciplinary knowledge, moving from the design discipline to interdisciplinary fields and from technological innovation to productization, always centered on "user needs" and "value creation", so that they can design intelligent products that are genuinely useful.

Keywords: visual technology, product design, user experience, intelligence

In 2020, China's core artificial intelligence industry reached 151.2 billion yuan, and breakthroughs in cutting-edge computing theory and technology, represented by deep learning and computer vision, are leading the third global wave of artificial intelligence. Compared with the first and second waves in the 1950s and 1980s, the third wave is supported by the maturing of underlying technologies such as cloud computing and big data, and it pays more attention to discovering and solving application problems in daily life. Driven by market factors such as consumption upgrading and intelligent transformation, more and more intelligent products are appearing in everyday scenarios, opening up more possibilities for innovative product design. At the same time, visual technology, the "eyes" of machines, is developing rapidly. Representative products such as sweeping robots, driving assistance systems, and facial recognition payment are emerging one after another and have become typical examples of the productization of the new generation of artificial intelligence. This article analyzes the breakthroughs in artificial intelligence represented by visual technology, together with typical application cases in intelligent scenarios. Building on the psychological model of product design, it proposes the IDUPM model and takes intelligent product design practice based on visual technology as the main case for exploring how current intelligent technology can be productized.

1 The "Eye" of the Machine: Breakthroughs and Innovations in Visual Technology

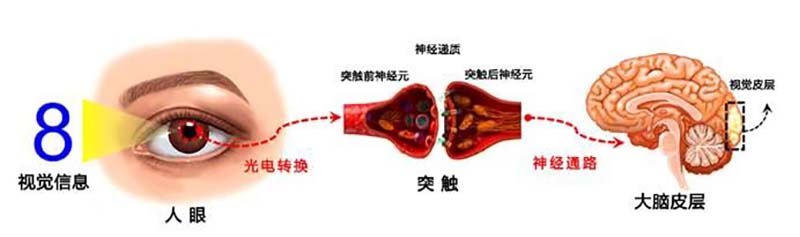

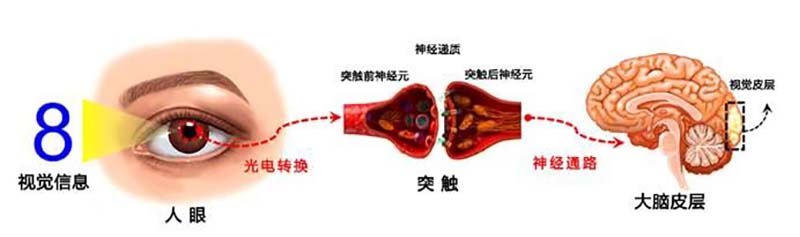

About 70% of the external information humans receive is obtained visually. As shown in Figure 1 [1], image information from the environment is carried by light and projected onto the retina. Photoreceptor cells on the retina convert the light signals into electrical signals, which are transmitted to the cerebral cortex through synapses and neural pathways. After deep processing and interpretation by the primary and higher visual cortex, the information is passed to other brain areas responsible for decision-making and is finally expressed as behavior.

Figure 1. Working principle of human vision

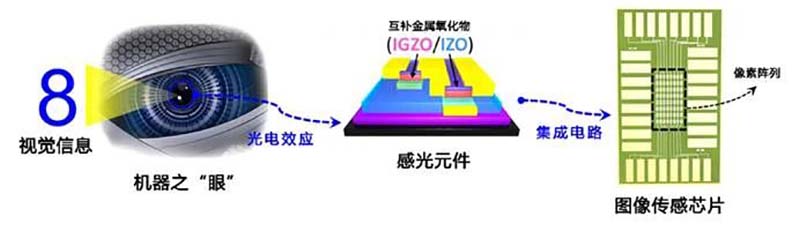

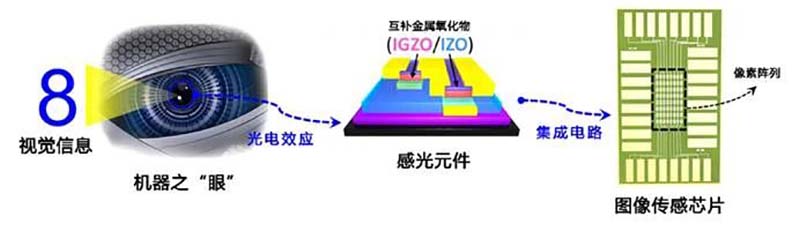

In recent years, with the continuous advancement of artificial intelligence, progress in sensing and chip technologies has led to breakthroughs in machine vision. By modeling and simulating the way the human eye receives, transmits, and processes information, machine vision has come to resemble human vision more and more closely. Optical sensors integrate photosensitive components to collect environmental information; dedicated integrated circuits and image sensor chips then produce usable arrays of pixel values. By establishing a mapping between these values and information, the system ultimately turns them into corresponding machine behaviors, and the sensor becomes the true "eye" of the machine (Figure 2 [2]).

Figure 2. Working principle of machine vision

According to the division of labor in processing environmental information, visual technology can be roughly divided into two categories: perception technology and cognitive technology. Perception technology relies on optical sensors to collect environmental information, and the form and depth of the resulting images differ with the type of sensor: for example, two-dimensional visual images, three-dimensional depth images, and infrared spectral images. The sensor type is chosen according to the task, so that it perceives the environmental information the task requires. Capturing accurate visual information is therefore the key to perception technology, and it requires combining optical sensor hardware with perception algorithms. The hardware includes CCD and CMOS cameras, structured-light cameras, infrared spectrometers, and so on. Perception algorithms, such as deep color perception algorithms for images [3] and compressed sensing algorithms [4], establish an accurate mapping between images and data. In short, perception technology is the selective collection and integration of environmental information.

Cognitive technology extends perception technology. It processes visual information more deeply to reach a more accurate understanding and to provide more effective feedback for decision-making. The cognitive process resembles the brain's processing of information transmitted from the retina: it typically uses chips and image algorithms with greater integrated computing power to understand visual information, judging position, movement, distance, meaning, and so on from the features of the image and how they change. Common techniques include deep learning algorithms for segmenting, extracting, and automatically recognizing vehicle types and license plates [5], and convolutional neural networks for face comparison and security verification [6]. Compared with perception technology, cognitive technology is the deep processing of visual information.

With the development of artificial intelligence, key breakthroughs have been made in both visual perception and visual cognition. For example, Simultaneous Localization and Mapping (SLAM) enables machines to obtain more accurate real-time position and movement information while building a map of the environment, and image recognition based on deep neural networks can significantly improve the accuracy of face recognition and object detection. These breakthroughs are rapidly making visual technology an important research topic in fields such as artificial intelligence, smart cities, robotics, security, and digital entertainment, and they provide important support for the innovative design and application of intelligent products.

2 Visual Sensors: From Technical Carriers to Product Features

In 1837, the French inventor and artist Daguerre invented the world's first camera, consisting of a dark box, a lens, and photosensitive material; it can be regarded as the earliest visual recording device. From then on, people could physically record and preserve images of everyday life, and photography became a new form of artistic expression. In the 1970s, photosensitive chip technologies such as CCD and CMOS, together with digital cameras, opened up the fields of digital imaging and visual sensing and supplied rich research material for later work in computer vision, image processing, film, and animation. In the 21st century, with the development of diffraction grating components and laser projectors, 3D sensors such as LiDAR and motion-sensing game devices have been widely adopted in fast-growing intelligent fields such as autonomous driving, mobile robots, and interactive entertainment, bringing more diverse application scenarios. As carriers of technology, visual sensors open up more possibilities for new forms of artistic expression, new research fields, and the innovative design of intelligent products.

Visual sensors, also known as optical sensors, consist mainly of optical components, imaging devices, and chips, and are used to capture image information from the environment. As the carrier of visual technology, they have always been an important driver of its progress. According to the characteristics of the image data, visual sensors can be broadly divided into traditional monocular sensors and three-dimensional stereo sensors. Monocular sensors, such as the single cameras commonly found in phones, surveillance systems, and standalone cameras, mainly use photosensitive chips such as CCD and CMOS to project color information from the three-dimensional environment onto a two-dimensional image for digital recording and storage. Three-dimensional stereo sensors combine two or more visual sensors and use stereo vision algorithms, structured-light spot calibration algorithms, time-of-flight algorithms, and similar methods to obtain and record distance information for each image. The emergence, evolution, and innovation of such sensors, for example the front-facing phone camera with face-recognition unlocking and the Kinect (the first-generation motion-sensing interaction sensor, launched by Microsoft in the United States in 2010), have given intelligent product design new features and points of differentiation.
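As a rough illustration of how the distance-recovery approaches mentioned above turn raw measurements into depth, the sketch below uses two textbook relations rather than anything specific to the products discussed here. For a rectified stereo pair, depth is Z = f·B/d (focal length f in pixels, baseline B in metres, disparity d in pixels); for time of flight, distance is c·t/2 because the light travels out and back. The function names and sample numbers are illustrative assumptions.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second


def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is at infinity")
    return focal_px * baseline_m / disparity_px


def tof_distance_m(round_trip_s: float) -> float:
    """Distance from a time-of-flight reading: light covers the path twice."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


if __name__ == "__main__":
    # Hypothetical stereo rig: 700 px focal length, 10 cm baseline, 35 px disparity.
    print(stereo_depth_m(focal_px=700.0, baseline_m=0.1, disparity_px=35.0))
    # Hypothetical time-of-flight reading: 20 ns round trip.
    print(tof_distance_m(round_trip_s=20e-9))
```

Both relations show why sensor geometry matters to the designer: a wider baseline or a finer timing resolution directly improves the depth precision a product can offer.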

Take the development of smartphone rear camera sensors as an example (Figure 3). In 2010, Apple equipped the iPhone 4 with a single 5-megapixel rear camera. In 2012, Nokia's photography-focused smartphone raised the pixel count directly to 40 megapixels. In 2013, Samsung went further and launched a phone capable of long-range zoom. As intelligent technology developed, smartphone cameras gained new highlights. In 2018, the Chinese brand Huawei introduced, for the first time, a three-lens combination of main, ultra-wide-angle, and telephoto rear cameras in its new Mate 20 Pro, improving both shooting and framing. The arrangement and combination of multiple cameras also became a major highlight of this flagship product. Many smartphone manufacturers subsequently adopted multi-camera combinations in their own products to strengthen their core competitiveness. In the iPhone 13 Pro, Apple combined ultra-wide-angle, wide-angle, and telephoto cameras with a LiDAR scanner, which enables precise ranging and 3D scanning, extends the phone's entertainment and interactive experience, further strengthens the product's competitiveness, and creates new selling points for smart products.

Figure 3. Evolution of Mobile Phone Rear Lens

3-1. 2010, iPhone 4, single 5-megapixel camera

3-2. 2012, Nokia 808, single 40-megapixel camera

3-3. 2013, Samsung Galaxy S4 Zoom, single 16-megapixel camera, 24 mm ultra-wide-angle lens

3-4. 2018, Huawei Mate 20 Pro, 40-megapixel main camera + 8-megapixel telephoto camera + 20-megapixel ultra-wide-angle camera

3-5. 2020, OPPO Reno4 Pro, 48-megapixel main camera + 13-megapixel telephoto camera + 12-megapixel ultra-wide-angle camera

3-6. 2021, iPhone 13 Pro, 12-megapixel camera system with telephoto, wide-angle, and ultra-wide-angle lenses

After rapid development in recent years, visual sensors have become not only a technological carrier and a highlight of intelligent product design but also an abstract design "symbol" that, in users' minds, stands for the flagship configuration and high-end choice among intelligent products.

3 Visual Interaction: Designing New Human-Computer Interaction Scenarios

Vision-based interaction is active, much like the active adaptability of the human eye. Devices that apply visual technology can autonomously select, recognize, and track target information in the environment, which makes more natural human-computer interaction scenarios possible. Interaction based on visual technology has the following characteristics:

1. Vectorization: Because visual technology can detect target objects in images and track them, it can readily capture the direction and distance of a target's movement within the field of view. When the target moves out of view, it can be recaptured by actively adjusting the machine's pose or by cooperating with other machines to search for it. In recent years, virtual scene rendering based on large-scale human motion capture and extraction has become popular in post-production for film and television.

2. Non-contact: Unlike touch, smell, and other senses that require direct contact with the object being sensed, interaction through visual technology is contact-free: any movement of a target object in the environment can be recognized and responded to. For example, in an immersive interactive installation based on action recognition (Figure 4), the designer created an "energy field" space that uses visual technology to recognize each visitor's movement. The floor projection follows the visitor's footsteps and forms a colorful path, changing its animation and imagery in real time to create an immersive spatial experience.

Figure 4. Immersive interactive exhibition at the Edge of Government, World Government Summit 2019

Figure 5. Facial recognition payment in a supermarket shopping settlement scenario

3. High security: Visual recognition based on biometric information, such as facial features and specific actions, offers high security and has been widely used to verify payments from users' digital wallet accounts, creating a new payment method. In 2015, Alipay launched a new "face recognition payment" function. Compared with cash, credit cards, QR codes, fingerprints, and other payment methods, face recognition payment makes the payment scenario a more natural interaction.

Therefore, when designing intelligent products based on visual technology, designers who analyze the characteristics of visual interaction are better placed to propose innovative human-computer interaction scenarios and to give users a better product experience.
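The "vectorization" characteristic in item 1 above can be made concrete with a small sketch. Assuming a tracker already reports the target's centroid in pixel coordinates for two consecutive frames (the tracker itself is out of scope here, and all names are hypothetical), the direction and distance of movement follow from basic vector arithmetic, and a bounds check signals when the target has left the field of view and a search should begin.

```python
import math


def motion_vector(prev_center, curr_center):
    """Return (dx, dy, distance, heading_deg) between two tracked centroids.

    Coordinates are image pixels with x to the right and y downward,
    so the heading is measured clockwise on screen from the +x axis.
    """
    dx = curr_center[0] - prev_center[0]
    dy = curr_center[1] - prev_center[1]
    distance = math.hypot(dx, dy)
    heading_deg = math.degrees(math.atan2(dy, dx)) % 360.0
    return dx, dy, distance, heading_deg


def has_left_view(center, width, height):
    """True when the tracked centroid falls outside the image bounds."""
    x, y = center
    return not (0 <= x < width and 0 <= y < height)


if __name__ == "__main__":
    print(motion_vector((100, 100), (103, 104)))
    print(has_left_view((650, 100), width=640, height=480))
```

In a real product the pixel displacement would still need to be converted to physical units using depth information; the sketch only shows the image-plane step.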

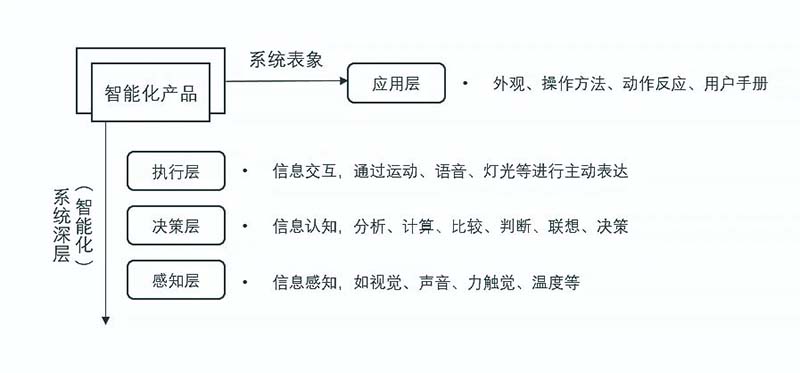

4 IDUPM: Establishing a Psychological Model for Intelligent Product Design

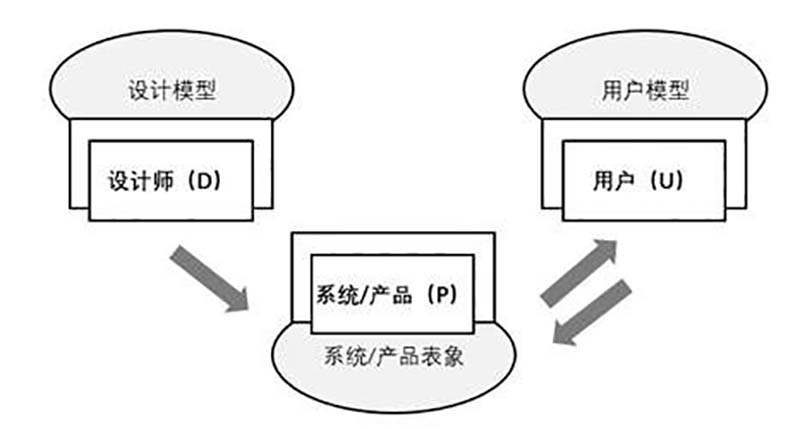

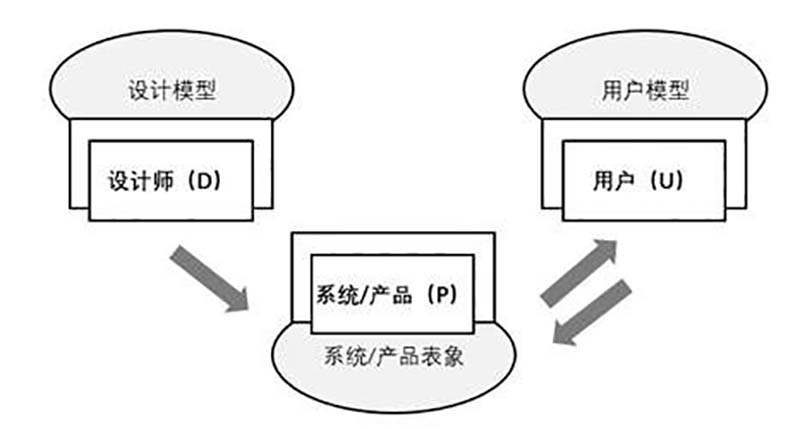

In his book The Design of Everyday Things, Don Norman states that product design should follow the DUP model, which comprises the design model, the user's model, and the system (product) representation (Figure 6). The design model is the designer's conception of the system (product); the user's model is the user's understanding of how the system operates. Ideally the user's model matches the design model, but users and designers can communicate only through the system (product) itself. In other words, users must build their conceptual model from the system's appearance, its operating methods, its responses to their actions, and the user manual. In this classic psychological model, everything users know about a product comes from the system representation.

Figure 6. Three Elements of Product Design Psychology Model

Classic psychological models still offer general theoretical guidance for intelligent product design. However, because they place so much weight on the system (product) representation, they overlook the product itself, and in particular the abilities that intelligent products possess: perception, memory and thinking, learning and adaptation, and even behavioral decision-making. Intelligent products can actively establish connections with both users and designers. Classic product design psychology models therefore struggle to provide good practical guidance for current intelligent product design.

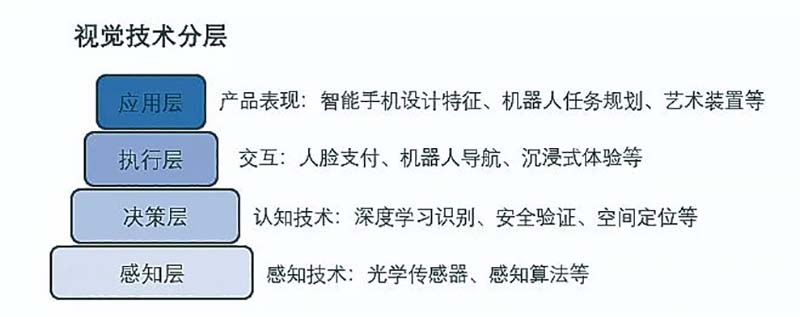

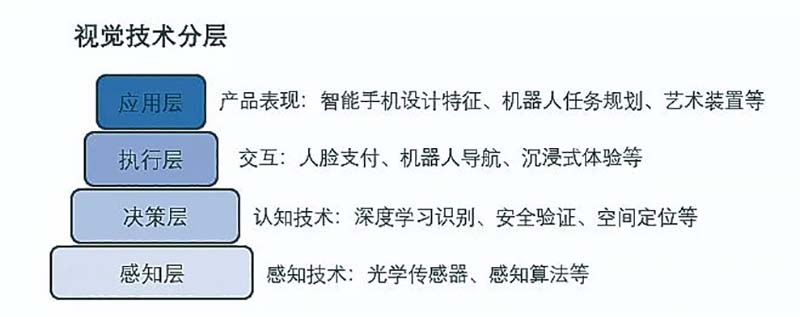

The author believes that, when designing intelligent products, designers need not only to understand and use the system (product) representation but also to explore fully and exploit what the technology contributes to intelligence, so as to communicate better with users. Take the layered design of visual technology as an example. As shown in Figure 7, visual perception technology, cognitive technology, interaction, and product performance correspond one-to-one with the perception, decision-making, execution, and application layers of the layered design model. By understanding and analyzing the role, function, and characteristics of visual technology in each layer, designers can better define a product's functionality and optimize its experience.

Figure 7. Visual Technology Layered Design Model
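One way to read the four layers is as a pipeline in which each layer consumes the previous layer's output. The sketch below is a deliberately tiny, hypothetical instance of that reading, not the article's implementation: the perception layer thresholds a grayscale pixel array, the decision layer interprets the result as a target position, the execution layer maps that to a machine behavior, and the application layer phrases it for the user. All function names, the threshold, and the behaviors are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale pixel array, values 0-255


@dataclass
class Observation:
    """Output of the decision layer: where the target is, if anywhere."""
    target: Optional[Tuple[float, float]]  # (row, col) centroid or None


def perceive(raw: Frame, threshold: int = 128) -> Frame:
    """Perception layer: turn raw pixel values into a binary mask."""
    return [[1 if px >= threshold else 0 for px in row] for row in raw]


def decide(mask: Frame) -> Observation:
    """Decision layer: interpret the mask as the centroid of bright pixels."""
    hits = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not hits:
        return Observation(target=None)
    mean_row = sum(r for r, _ in hits) / len(hits)
    mean_col = sum(c for _, c in hits) / len(hits)
    return Observation(target=(mean_row, mean_col))


def execute(obs: Observation, frame_width: int) -> str:
    """Execution layer: map the interpretation to a machine behavior."""
    if obs.target is None:
        return "search"
    col = obs.target[1]
    center = (frame_width - 1) / 2
    if col < center:
        return "turn_left"
    if col > center:
        return "turn_right"
    return "forward"


def present(action: str) -> str:
    """Application layer: what the user sees of the product's behavior."""
    return f"Robot status: {action.replace('_', ' ')}"


def run_pipeline(raw: Frame) -> str:
    """Chain the four layers for a single frame."""
    mask = perceive(raw)
    obs = decide(mask)
    action = execute(obs, frame_width=len(raw[0]))
    return present(action)


if __name__ == "__main__":
    print(run_pipeline([[0, 0, 0, 200], [0, 0, 0, 220], [0, 0, 0, 0]]))
```

Keeping the layers as separate functions mirrors the point of the layered model: a designer can reason about, or swap out, one layer (say, a better sensor or a different user-facing message) without redefining the others.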

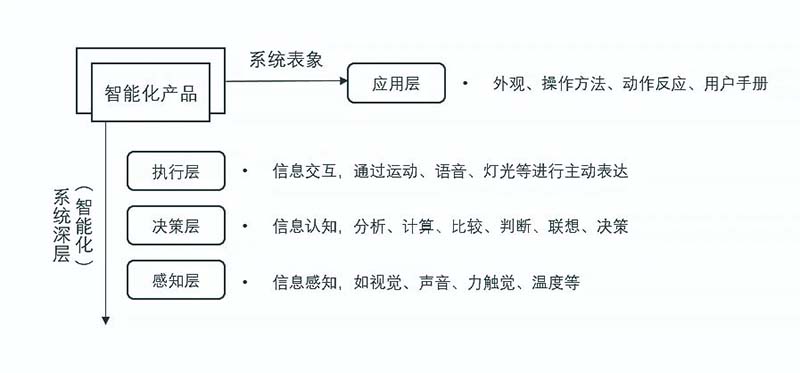

A technology-oriented layered design for intelligent products, represented here by visual technology, can effectively make up for the shortcomings of classic product design psychology models in guiding intelligent product design practice. On this basis, the author proposes a psychology model for intelligent product design, IDUPM (Intelligent Design User Product Model). The intelligence component of the model is shown in Figure 8. Some scholars have summarized the characteristics of intelligence as follows: the ability to perceive the external world and obtain external information, which is the prerequisite for any intelligent activity; the ability to store perceived information and the knowledge produced by thinking, and to use existing knowledge to analyze, calculate, compare, judge, associate, and make decisions; the ability to keep learning and accumulating knowledge through interaction with the environment, and so adapt to environmental change; and the ability to respond to external stimuli, make decisions, and convey the corresponding information [8]. These characteristics are closely tied to the development of artificial intelligence, which draws on the latest research and theoretical innovation in fields as varied as semiconductor chips, computer algorithms, optical sensing, and new materials chemistry. This places higher demands on designers of intelligent products to understand and transform interdisciplinary knowledge.

Figure 8. IDUPM Intelligent Product Design Psychology Model

IDUPM requires designers to understand and focus on the deeper layer of intelligence in the system (product). It guides them to explore what intelligent technology really offers and, by analyzing and exploiting innovations in algorithms, sensors, interaction behavior, and other areas, to feed these back into communication with the user's model. In this respect it is consistent with the classic product design psychology model. What distinguishes IDUPM in intelligent product design is its focus on the deep characteristics of the system (product) and its operability: it maps the inherent attributes of intelligence onto the behavior of the system (product), which in turn guides communication with the user's model and re-optimization of the design model, forming an "intelligent agent" feedback mechanism similar to that of a living organism.

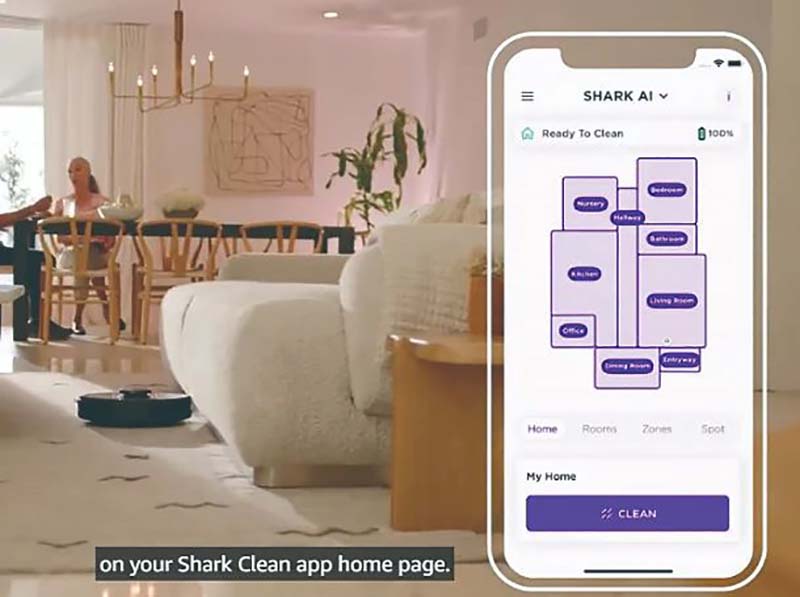

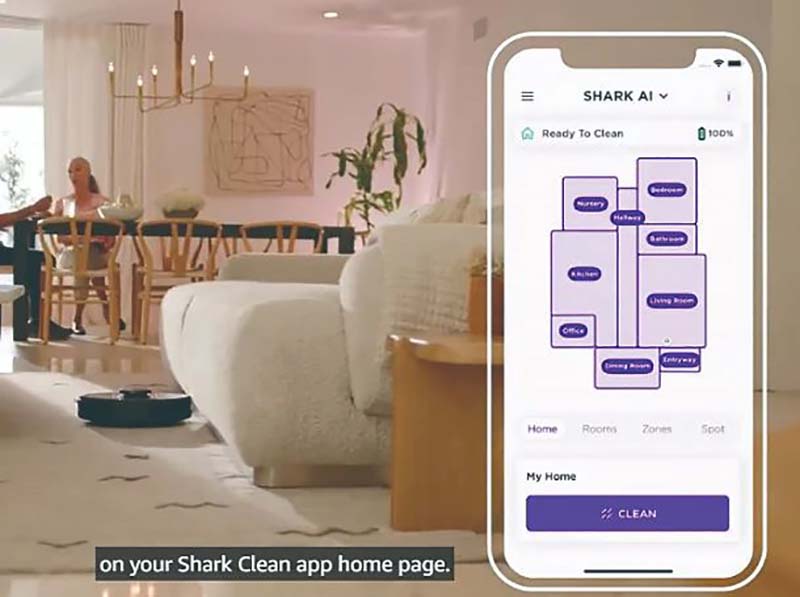

The author applied the IDUPM design model in the research and development of household sweeping robots. The map-building and visual positioning functions provided by visual technology were designed and packaged as an independent module, combining cameras and computing chips, that is embedded in the robot. The perception layer collects visual information and passes it to the decision-making layer for comparison, analysis, and calculation, which yields the robot's position and movement during operation. Path planning and obstacle avoidance methods are then designed at the decision-making layer to help the robot plan and clean the home autonomously. In addition, the "map" the robot obtains through visual technology is visualized on the mobile side, so that the user's connected smart devices show its working status and the home map in real time. Users can in turn use these devices to influence and control the robot's working mode, task type, and so on, which increases the interaction between users and the robot. The Shark Ninja IQ series of household sweeping robots was developed by directly productizing these visual technology results under the design method described above. After launch, the product was named the year's most popular consumer electronics product, with sales of more than 2 million units for a single model. IDUPM thus effectively supplements the classic product design psychology model for intelligent product design, with a clear transformation path and practical method guidance.

Figure 9. Shark Ninja IQ series household sweeping robot
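The article does not say which planning method the robot uses. Purely as an illustration of what "path planning and obstacle avoidance" on a visually built map can look like, the sketch below runs a breadth-first search over a small occupancy grid, where 0 marks free floor and 1 marks an obstacle. Breadth-first search is a stand-in chosen for brevity, and the grid, cell coordinates, and function name are all hypothetical.

```python
from collections import deque
from typing import List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col) on the occupancy grid


def plan_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = obstacle).

    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None  # start or goal sits on an obstacle
    came_from = {start: None}  # remembers how each cell was reached
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through came_from to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and not grid[nr][nc] and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


if __name__ == "__main__":
    home = [
        [0, 0, 0],
        [1, 1, 0],  # a sofa blocks the direct route
        [0, 0, 0],
    ]
    print(plan_path(home, start=(0, 0), goal=(2, 0)))
```

The same grid is what a companion app could render as the "home map", which is why a shared map representation serves both the decision-making layer and the application layer.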

5 User Needs and Value Creation: The Productization Path of Intelligent Technology

In intelligent product design research, exploring and exploiting the deep intelligent characteristics of a system is not the purpose of product design. It is a design method, one the author has found inspiring and has summarized and supplemented from earlier intelligent product design work. Whether traditional or intelligent, product design should always revolve around "user needs" and "value creation"; otherwise the result is a distorted practice of "designing for the sake of design", and distorted products.

1. Meeting user needs is the original intention of all product design: the product must be useful and usable. The main reason for giving robots intelligence is to help users with tasks they can do but do not want to do, or cannot do at all. Floor cleaning, for example, is a frequent household chore that family members used to do with tools such as mops and vacuum cleaners. Besides being time-consuming and laborious, it is psychologically unpleasant, so it is often handed out within the family as a "punitive" chore. An intelligent sweeping robot with integrated visual technology can readily perform global planning, navigation, and positioning and clean efficiently, so users get clean floors without spending time or effort. It also gives users a pleasant psychological experience: the sense of being in control of an intelligent product.

2. With regard to value creation, designers need to think about what is different and unique in the value an intelligent product creates for users: why must users choose this product, and is its functionality irreplaceable? Consider an intelligent driver-assistance driving recorder with visual recognition. In addition to recording everyday driving data and video, a camera on the back of the recorder, similar to a phone's front-facing camera, locates the driver's face, measures precisely how far the eyes are open, judges the state of the eyes, and thereby detects driver fatigue reliably [9]. When the recorder determines that the driver's fatigue level has reached a certain threshold, it sounds an alarm to remind the driver to stop and rest. Fatigue detection is currently one of the more practical and easily implemented technologies based on visual cognition. According to public information, in 2021 fatigue driving accounted for 21% of all traffic accidents in China, and the fatality rate in fatigue-related accidents was as high as 83%. By monitoring and detecting driver fatigue, the intelligent driving recorder can therefore help reduce the accident rate and better protect drivers' lives. This feature gives the product a unique and necessary value that sets it apart from ordinary driving recorders. Grasping "user needs" and "value creation" is the most important productization path for every designer of intelligent products, because these two factors ultimately determine whether users are willing to pay for the products we design and how much they are willing to pay. They are also an important indicator of whether a new product is accepted by users.
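The fatigue-detection logic described in item 2 (measure eye opening, judge the eye state, alarm at a threshold) can be sketched as follows. This is not the method of reference [9]. It is a simplified measure in the spirit of PERCLOS (percentage of eyelid closure over time), and it assumes that an upstream face-landmark detector already supplies the eyelid gap and eye width in pixels for each frame. The class name, window size, and thresholds are illustrative assumptions.

```python
from collections import deque


def eye_openness(eyelid_gap_px: float, eye_width_px: float) -> float:
    """Ratio of vertical eyelid gap to horizontal eye width.

    Dividing by the width makes the value independent of how far
    the driver sits from the camera.
    """
    if eye_width_px <= 0:
        raise ValueError("eye width must be positive")
    return eyelid_gap_px / eye_width_px


class FatigueMonitor:
    """Signals an alarm when eyes are closed in too many recent frames."""

    def __init__(self, window: int = 30, closed_below: float = 0.2,
                 alarm_fraction: float = 0.5) -> None:
        self.closed_below = closed_below      # openness below this counts as closed
        self.alarm_fraction = alarm_fraction  # share of closed frames that triggers the alarm
        self.history = deque(maxlen=window)   # True = eye closed in that frame

    def update(self, openness: float) -> bool:
        """Record one frame and return True if the alarm should sound."""
        self.history.append(openness < self.closed_below)
        if len(self.history) < self.history.maxlen:
            return False  # wait until the window is full
        closed_share = sum(self.history) / len(self.history)
        return closed_share >= self.alarm_fraction


if __name__ == "__main__":
    monitor = FatigueMonitor(window=4)
    for value in (0.3, 0.3, 0.1, 0.1):
        print(monitor.update(value))
```

Using a window rather than a single frame keeps ordinary blinks from triggering the alarm, which matters for the user experience the item describes: an alarm that fires too readily would quickly be ignored.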

6 Conclusion

Driven by the third wave of artificial intelligence, breakthrough technologies and cutting-edge theories have emerged in fields such as semiconductor chips, computer algorithms, optical sensing, and new materials chemistry. As major technology companies and innovative startups build these technologies into traditional products, a new product category, "intelligent products", has emerged. Throughout history, vision has been the most important sense through which humans understand the world, and visual information has been an important force in social progress. The same holds for the advancement of intelligent technology and products. New products such as smartphones capable of automatic photo-based modeling, payment terminals with precise face recognition, and household cleaning robots with autonomous recognition and global planning have been launched one after another. Their inherently intelligent nature also places higher demands on today's designers of intelligent products: designers need to understand the deep intelligent characteristics of products, take into account the characteristics of intelligence in perception, decision-making, execution, and other aspects, and establish a psychological model better suited to intelligent product design, so that they can communicate better with products and users.

It is worth noting that, in the new wave of artificial intelligence, the breakthroughs in visual technology are the most remarkable. Well-known deep learning algorithms and simultaneous localization and mapping (SLAM) algorithms were pioneered in the field of visual technology and triggered a new wave of intelligent product design. In July 2017, the State Council issued the "Development Plan for the New Generation of Artificial Intelligence", which specifically called for "vigorously developing the emerging industry of artificial intelligence, accelerating the transformation and application of key artificial intelligence technologies, promoting technology integration and business model innovation, and promoting innovation in key areas of intelligent products". It explicitly listed emerging industry opportunities represented by intelligent software and hardware, intelligent robots, and intelligent terminals. The design and innovation of intelligent products are therefore also a new opportunity that the times have given to designers.

Scholars differ in their arguments and entry points when discussing intelligence and its relationship with design, and the paths and methods by which design research leads to productization also differ. The original intention and goal, however, are the same: to provide unique value to users and meet their needs through designers' design and optimization of products. In the end, intelligence is only a means and a tool that designers use to design better products. It is designers' understanding, absorption, exploration, and transformation of knowledge from daily life that drives product design and innovation. The rapidly evolving intelligent technology represented by visual technology particularly deserves the attention of today's designers, who can continually draw new design inspiration from it and create better, more innovative products to meet users' growing and increasingly diverse needs.

Notes:

[1] Hong S, Cho H, Kang B H, et al. Neuromorphic Active Pixel Image Sensor Array for Visual Memory [J]. ACS Nano, 2021, 15(9).

[2] Same as [1].

[3] Zeng X, Tong S, Lu Y, et al. Adaptive Medical Image Deep Color Perception Algorithm [J]. IEEE Access, 2020, PP(99): 1-1.

[4] Li S, Chi M, Zhang Y, et al. Methods, Apparatus, and Systems for Localization and Mapping [P]. US10782137B2, 2020.

[5] Zhou Yun, Hu Jinnan, Zhao Yu, Zhu Zhengrong, Hao Guanwang. Vehicle Target Tracking Based on Kalman Filter Improved Compressed Perception Algorithm [J]. Journal of Hunan University (Natural Science Edition), June 13, 2022: 1-10.

[6] Li Yanyi, et al. Research on Deep Learning Automatic Vehicle Recognition Algorithm Based on RES-YOLO Model [J]. Sensors, 2022, 22(10): 3783.

[7] Zhang Z. Microsoft Kinect Sensor and Its Effect [J]. IEEE Multimedia, 2012, 19(2): 4-10.

[8] Deng Yingna, Zhu Hong, Li Gang, et al. Target Recognition Based on Color Invariants and Entropy Maps in Multi Camera Environments [J]. Journal of Computer Aided Design and Graphics, 2009, Vol. 6: 5.

[9] Shao Yuchen, et al. Fatigue detection of motor vehicle drivers using front facing cameras on mobile phones [J]. Signal Processing, 2015, 31(9): 1138-1144.

Source: Decoration, Issue 9, 2022

Original text: "The Eye of Machines: The Application of Visual Technology in Intelligent Product Design"

Author: Wang Gang, Future Laboratory of Tsinghua University; Chen Zhen (corresponding author), School of Fine Arts, Tsinghua University