1. Versatility: resolving the contradiction between high demand and low robot penetration

1.1 Robot evolution path: from fixed to mobile, from standalone to collaborative, from single-purpose to general-purpose

Service robots can only be commercialized if the product delivers real value, and whether it does depends on whether the robot is general-purpose. Against the backdrop of global labor shortages, the robotics industry is thriving: the global service robot market reached US$21.7 billion in 2022, with a compound annual growth rate of over 20% over the past five years. Despite this rapid growth, service robot penetration remains low, and large-scale commercial deployment has not gone smoothly.

We believe the reason is that most current service robots have adaptability problems to some degree. First, they cannot adapt to environmental change: after the environment changes, users cannot re-adapt the robot to the scene through simple operations. Second, their intelligence is limited, and pedestrian obstacle avoidance and task performance are unsatisfactory. Third, deployment is complex (SLAM mapping, target-point annotation, etc.) and can only be carried out by the vendor's on-site deployment engineers; users find it hard to operate or take part, and any later change again requires an on-site engineer.

Take the supermarket scene as an example. Complex environment: open-frame shelves (overhanging obstacles), narrow aisles, drop-off hazards, low obstacles, and temporary floor coverings test the robot's traversability, perception, and task planning. High dynamism: malls have heavy foot traffic, crowds form easily, and there are many moving obstacles, which places high demands on safety and obstacle avoidance. Many special objects and large lighting variation: highly transparent objects such as glass guardrails, escalators, glass revolving doors, and glass walls are essentially unrecognizable to most robots and interfere with LiDAR, leading to misjudgments, collisions, falls, and an inability to approach work areas. Robots that rely on visual sensors struggle to operate stably across normal light, darkness, overexposure, and other lighting conditions.

Industrial robots faced the same problems, which held back their penetration until collaborative robots emerged. The global collaborative robot market reached 8.95 billion yuan in 2022 and is expected to reach 30 billion yuan by 2028, a compound annual growth rate of 22.05% over 2022-2028. In China, collaborative robot sales grew from 3,618 units in 2017 to 19,351 units in 2022, and shipments are expected to exceed 25,000 units in 2023; the Chinese market grew from RMB 360 million in 2016 to RMB 2.039 billion in 2021, a compound annual growth rate of 41.5%. Collaborative robots can also be regarded as service robots, because they are designed to work alongside humans. Traditional industrial robots are separated from people by safety fences and perform a limited set of tasks such as welding, spraying, and lifting. Collaborative robots are more flexible, more intelligent, easier to work with, and more adaptable, allowing manufacturers in industries such as automotive and electronics to extend automation into final assembly, polishing and coating, and quality inspection.
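The growth figures above can be cross-checked with the compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below only recomputes rates from the endpoints quoted in this paragraph; it adds no new market data.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value reached by compounding `start` at `rate` for `years` years."""
    return start * (1 + rate) ** years


# China cobot market, RMB million: 360 (2016) -> 2,039 (2021)
china_market = cagr(360, 2039, 2021 - 2016)
# Global cobot market, billion yuan: 8.95 (2022) -> 30 (2028 forecast)
global_market = cagr(8.95, 30.0, 2028 - 2022)
# China cobot unit sales: 3,618 (2017) -> 19,351 (2022)
china_units = cagr(3618, 19351, 2022 - 2017)
# 2028 market size implied by compounding 8.95 at the stated 22.05%
implied_2028 = project(8.95, 0.2205, 2028 - 2022)

print(f"China market CAGR 2016-2021: {china_market:.1%}")
print(f"Global market CAGR 2022-2028: {global_market:.1%}")
print(f"China unit sales CAGR 2017-2022: {china_units:.1%}")
print(f"2028 size at 22.05%: {implied_2028:.1f} billion yuan")
```

The China market rate comes out at about 41.5%, matching the report. The global forecast gives about 22.3%, slightly above the stated 22.05%, which is consistent with the 30 billion yuan endpoint being a rounded figure (compounding at 22.05% gives roughly 29.6 billion).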

1.2 How to make robots more versatile?

To make robots more versatile, their perception, thinking and decision-making, and action execution abilities must all improve. We believe the emergence of GPT (Generative Pre-trained Transformer, a pre-trained large language model) and of humanoid robots is a big step for robots on the path toward artificial general intelligence. Perception (the robot's eyes): laser and visual navigation are the mainstream solutions for perception and localization in autonomous mobile robots. Computer vision has evolved from traditional methods built on feature descriptors to deep learning methods represented by convolutional neural networks (CNNs). General-purpose vision models are still at the research and exploration stage, and the scenes humanoid robots face are more general and complex than those of industrial robots, so an "All in One" multi-task training scheme for vision models can help robots adapt better to everyday human environments. On the one hand, the strong fitting ability of large models gives humanoid robots higher accuracy in tasks such as object recognition, obstacle avoidance, 3D reconstruction, and semantic segmentation. On the other hand, large models address deep learning's over-reliance on single-task data distributions and its poor generalization across scenes: general vision models learn more general knowledge from large amounts of data and transfer it to downstream tasks, and models pre-trained on massive data have broad knowledge coverage that improves scene generalization.

Thinking and decision-making (the robot's brain): today's robots are special-purpose machines that can only be used in limited scenarios. Even robotic grasping based on computer vision remains confined to limited scenarios: algorithms only recognize objects, while how to act and what to do must still be defined by humans. A general-purpose robot, asked to water the flowers, would know to fetch a kettle, fill it with water, and then water the flowers. This requires common sense. How can robots acquire common sense? Before large models emerged, this problem was almost unsolvable. Large models give robots common sense and therefore the generality to complete a wide range of tasks, fundamentally changing how general-purpose robots are built.

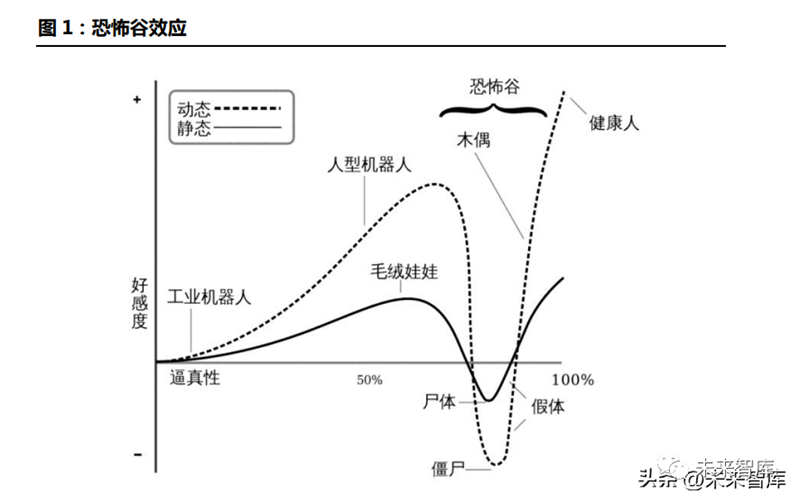

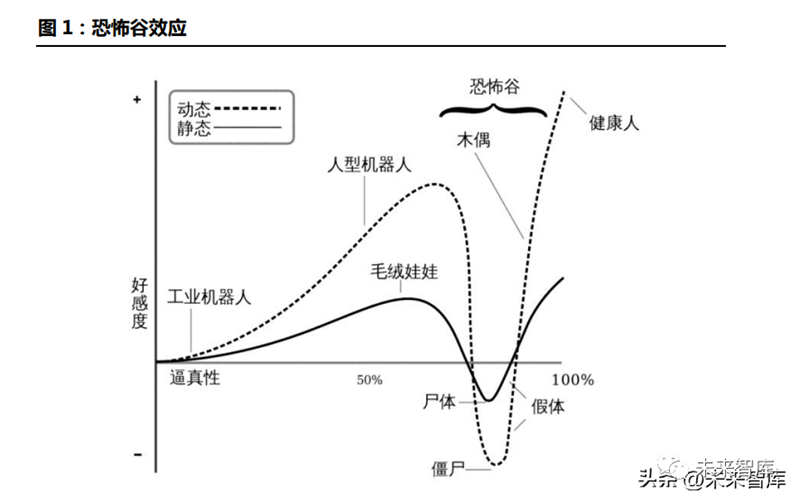

Execution (the robot's limbs): locomotion (legs) plus fine manipulation (hands). Giving robots a humanoid form makes their execution abilities more versatile, and because humanoid robots can use tools made for humans, there is no need to build dedicated tools for them. The environments in which robots perform tasks, including buildings, roads, facilities, and tools, are built around the human body; the world is designed for the convenience of human-shaped beings. A robot with a new form would require people to redesign the environment around it. Designing a robot for tasks within a narrow range is relatively easy, but making robots more versatile requires humanoid robots that can act as human stand-ins. In addition, people form emotional connections more easily with humanoid robots, which feel more approachable. Japanese roboticist Masahiro Mori's uncanny valley hypothesis suggests that people's affinity for a robot generally rises as its appearance and motion become more human-like, although it dips sharply for likenesses that are nearly but not quite human.

1.3 The eve of commercialization of humanoid robots

From the DARPA Robotics Challenge in 2015, through 2019, when many humanoid robot research projects were cut amid widespread criticism within the industry, to the boom sparked by Tesla in 2022, the humanoid robot industry has developed in an upward spiral. Boston Dynamics' Atlas, Tesla's Optimus, Xiaomi's CyberOne, IHMC's Nadia, Agility Robotics' Digit, Honda's ASIMO, and HRP-5P are all exploring commercial forms of humanoid robots. We have sorted out representative products in the history of humanoid robots. The first humanoid robot, WABOT-1 (1973): in 1973, a team led by Ichiro Kato of Waseda University in Japan developed WABOT-1, the world's first humanoid intelligent robot. It had a limb control system, a vision system, and a conversation system, with two cameras on its chest and tactile sensors on its hands.

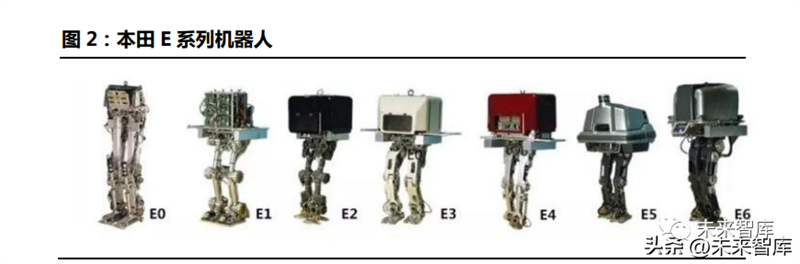

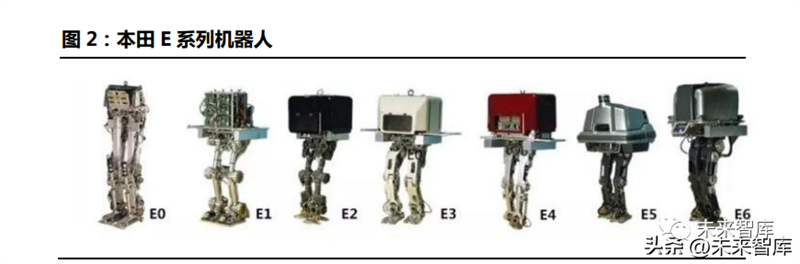

The Honda E-series robots (1986-1993) laid the foundation for stable walking. Honda's E-series bipedal robots, E0 through E6, progressed from slow to fast walking and from walking in a straight line to walking stably on steps and slopes, laying the foundation for the later P-series humanoid robots and marking a milestone in robotics history. Honda P-series robots (1993-1997) and ASIMO (2000-2011): in 1993 Honda developed its first humanoid robot prototype, P1; P4, the fourth and last robot of the P series, became commonly known as ASIMO, released in 2000. The third-generation ASIMO, launched in 2011, is 1.3 m tall, weighs 48 kg, and can move at up to 9 km/h. The latest version of ASIMO (2012) not only walks and performs a variety of human-like movements, but can also execute preset motions and respond to human voice, gesture, and other commands. It also has basic memory and recognition abilities. In 2018, Honda announced that it had stopped developing ASIMO and would focus on more practical applications of the technology.

The HRP series robots (1998-2018) aimed to take over heavy work in the construction industry. HRP (Humanoid Robotics Project) is a project to develop a general-purpose home assistant robot, sponsored by Japan's Ministry of Economy, Trade and Industry and the New Energy and Industrial Technology Development Organization, led by Kawada Industries and jointly developed with the National Institute of Advanced Industrial Science and Technology (AIST) and Kawasaki Heavy Industries. The project began with HRP-1 (based on Honda's P3) in 1998 and was followed by humanoid robots such as HRP-2P, HRP-2, HRP-3P, HRP-3, HRP-4C, and HRP-4. The latest robot, HRP-5P, was released in 2018; it is 182 cm tall, weighs 101 kg, has 37 degrees of freedom across its body, and is intended to replace heavy work in the construction industry.

Boston Dynamics (1986-2023): a leader in legged-robot control technology, with a clear military orientation. Boston Dynamics first became world-famous for BigDog, and it has released many robots, including BigDog, RiSE, LittleDog, PETMAN, LS3, Spot, Handle, and Atlas, ranging from single-legged and multi-legged robots to humanoids. It is a typical technology-driven company that continuously iterates its robots' mechanical structure, gait-control algorithms, and power-system energy consumption. Its core focus is developing legged robots that adapt to different environments, and its key technology lies in dynamics research and balance control.

Digit series robots (2019-2023): walking robots aimed at commercialization in logistics. The Digit series is Agility Robotics' attempt to commercialize in the logistics field. Agility, a spin-off from Oregon State University (OSU), researches and manufactures bipedal robots and has developed the MABEL, ATRIAS, Cassie, and Digit series. Cassie reached a remarkable 4 m/s, a milestone for fast walking in legged robots. In 2019, Agility launched the humanoid robot Digit, which adds a torso, arms, and more computing power to Cassie. It can carry boxes weighing up to 18 kg and perform tasks such as moving and unloading packages.

Xiaomi's "Iron Big" robot (2022): in 2021, Xiaomi released the robot dog CyberDog, its first attempt at legged robots. In August 2022, Xiaomi's first full-size humanoid bionic robot, CyberOne, debuted at its autumn launch event. CyberOne is 177 cm tall, weighs 52 kg, and is nicknamed "Iron Big". It can perceive 45 categories of human emotion and distinguish 85 types of environmental semantics; Xiaomi's in-house whole-body control algorithm coordinates the movement of its 21 joints; its Mi Sense visual space system reconstructs the real world in 3D; and it is driven by 5 types of joint actuators with a peak torque of 300 N·m.

Tesla Optimus (2022): driving the commercialization of humanoid robots. The Optimus prototype was unveiled at Tesla's 2022 AI Day; it is 1.72 m tall, weighs 57 kg, has a load capacity of 20 kg, and has a top speed of 8 km/h. Optimus is still progressing rapidly: within just 8 months, the robot was able to perform complex actions such as walking upright, carrying objects, and watering plants.

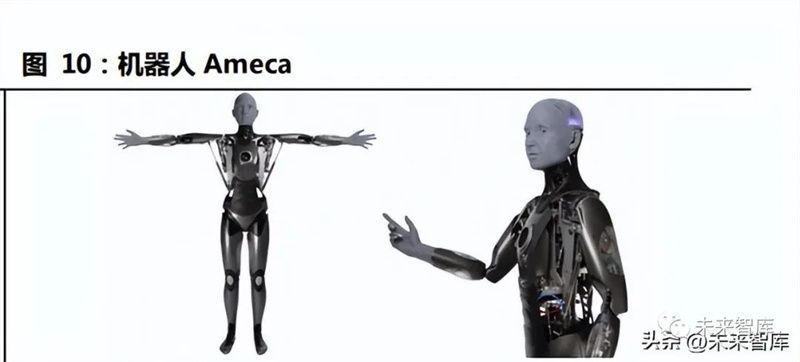

Interactive robots Sophia (2015) and Ameca (2021) attempt to give robots human-like facial expressions. Sophia, a humanoid robot developed by Hanson Robotics, was launched in 2015. Its skin is made of Frubber, a biomimetic material, and it uses speech recognition and computer vision to recognize and imitate a range of human facial expressions and to converse with people by analyzing their expressions and speech. Ameca was created by Engineered Arts, a leading British designer and manufacturer of biomimetic entertainment robots. Its upgraded face has 12 new facial actuators, allowing it to blink, purse its lips, frown, and smile in front of a mirror. Ameca can perform dozens of human-like body movements and has been called "the most realistic robot in the world".

2. Large AI models + humanoid robots: giving robots common sense

2.1 The Training Process and Development Trends of AI Large Models

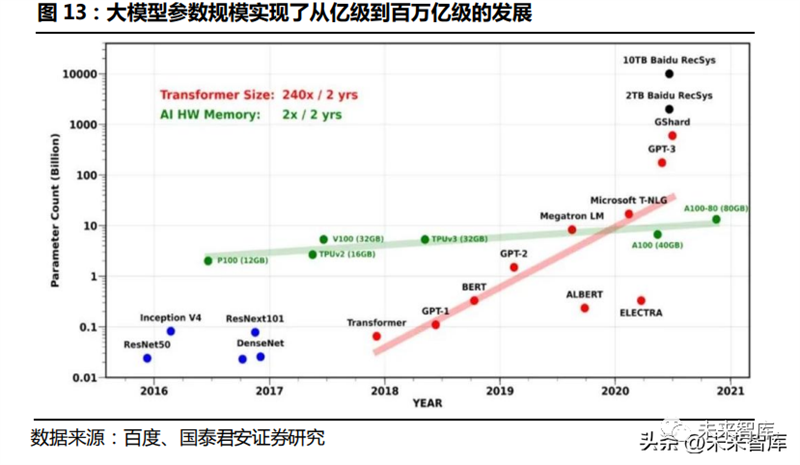

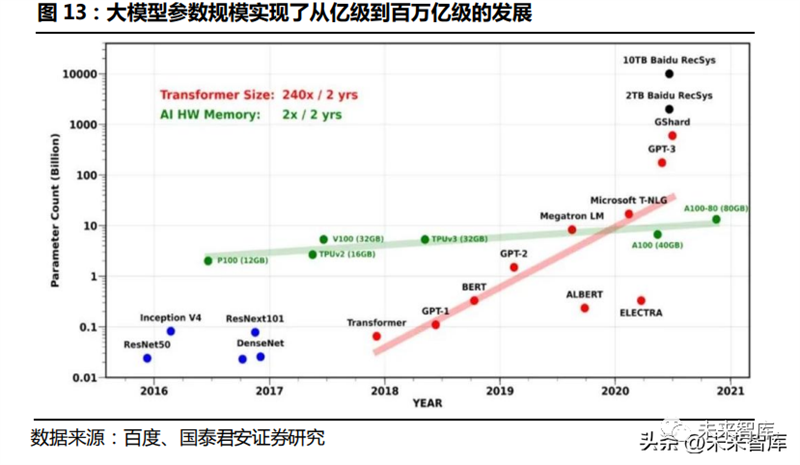

Large model = pre-training + fine-tuning. Starting with the Transformer in 2017 and continuing through GPT-1, BERT, GPT-2, GPT-3, and GPT-4, model parameter counts have grown from hundreds of millions to hundreds of billions. Large models (pre-trained models, or foundation models) are pre-trained on unlabeled data and then fine-tuned on small, specialized labeled datasets for downstream prediction tasks. Transfer learning is the core idea behind pre-trained models: when data in the target scenario is insufficient, a deep neural network is first trained on a large public dataset, then transferred to the target scenario and fine-tuned on a small target dataset to reach the required performance. Pre-training greatly reduces the amount of labeled data needed downstream, which suits scenarios where large labeled datasets are hard to obtain.
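The transfer-learning idea can be illustrated with a deliberately tiny toy, assuming a two-parameter linear model in place of a deep network and synthetic data in place of real datasets. Parameters are first fitted on a large "public" source dataset and then used as the starting point for a few gradient steps on a four-point target dataset from a related task; the same short training budget starting from zero serves as the no-pre-training baseline.

```python
def mse(params, data):
    """Mean squared error of the linear model y = w * x + b."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def gradient_descent(params, data, lr, steps):
    """Plain full-batch gradient descent on the MSE loss."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


# Large source dataset (synthetic): y = 2x + 1 at 200 evenly spaced points.
xs = [i / 100 - 1 for i in range(200)]
source = [(x, 2 * x + 1) for x in xs]
# Small target dataset from a related task (synthetic): y = 2x + 1.5, 4 points.
target = [(x, 2 * x + 1.5) for x in (-0.5, 0.0, 0.5, 1.0)]

# Pre-train on the source data, then fine-tune briefly on the target data.
pretrained = gradient_descent((0.0, 0.0), source, lr=0.1, steps=2000)
finetuned = gradient_descent(pretrained, target, lr=0.1, steps=20)
# Baseline: the same short budget on the target data, without pre-training.
scratch = gradient_descent((0.0, 0.0), target, lr=0.1, steps=20)

print(f"fine-tuned loss {mse(finetuned, target):.4f}, "
      f"from scratch {mse(scratch, target):.4f}")
```

The warm start already carries the structure the two tasks share (here, the slope), so a handful of labeled target points is enough to adjust the rest. Real large models are pre-trained with self-supervised objectives on unlabeled data, which this toy does not capture.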

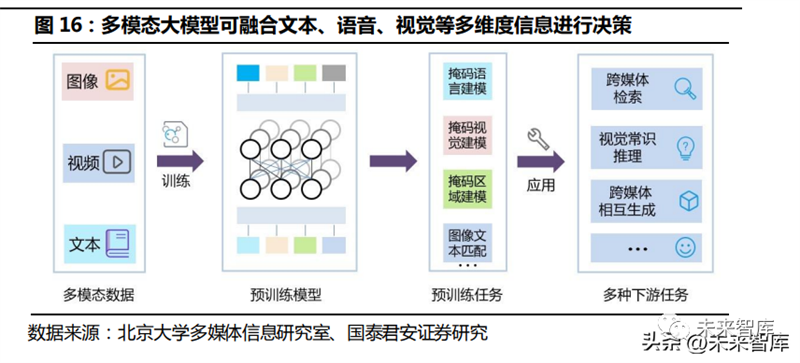

The development process and trend of large models: in terms of parameter scale, large models have progressed from pre-trained models to large-scale and then ultra-large-scale pre-trained models, with parameter counts growing from hundreds of millions to hundreds of billions or more. In terms of data modality, large models are moving from single-modality models (text, speech, or vision) toward multimodal fusion on the path to general AI.

2.2 Large AI models give humanoid robots general task-solving capabilities

Large AI models will merge with humanoid robots across speech, vision, decision-making, and control, forming a closed perception-decision-control loop that greatly improves robot "intelligence". Speech: ChatGPT, a pre-trained language model, can be applied to natural-language interaction between robots and humans; for example, a robot can use ChatGPT to understand a human's natural-language instruction and act on it. Natural language is the most common medium of human interaction, and speech, as its carrier, will be key to making robots human-like. Deep learning has brought speech interaction technology (speech recognition, natural language processing, and speech synthesis) to a relatively mature stage, but in practice it still suffers from semantic misunderstanding (of irony, for example), weak multi-turn dialogue, and stilted text. Large language models offer a solution for autonomous speech interaction in robots. In general language tasks such as context understanding, multilingual recognition, multi-turn dialogue, emotion recognition, and ambiguous-meaning recognition, ChatGPT has shown comprehension and generation abilities comparable to those of humans. With the support of large models represented by ChatGPT, general language understanding and interaction for humanoid robots can now be put on the agenda, marking the beginning of general AI empowering general-purpose service robots.

Vision: large vision models make humanoid robots' recognition more accurate and their applicable scenes broader. Computer vision has evolved from traditional methods built on feature descriptors to deep learning methods represented by CNNs, and general-purpose vision models are now at the research and exploration stage. On the one hand, the strong fitting ability of models with many parameters gives humanoid robots higher accuracy in tasks such as object recognition, obstacle avoidance, 3D reconstruction, and semantic segmentation. On the other hand, general large models address a weakness of earlier deep learning methods such as CNNs: over-reliance on single-task data distributions and poor generalization across scenes. General large vision models learn more general knowledge from large amounts of data and transfer it to downstream tasks; pre-trained on massive data, they have broad knowledge coverage that greatly improves scene generalization. Because the scenes humanoid robots face are more general and complex than those of industrial robots, an "All in One" multi-task training scheme for large vision models can help robots adapt better to everyday human environments.

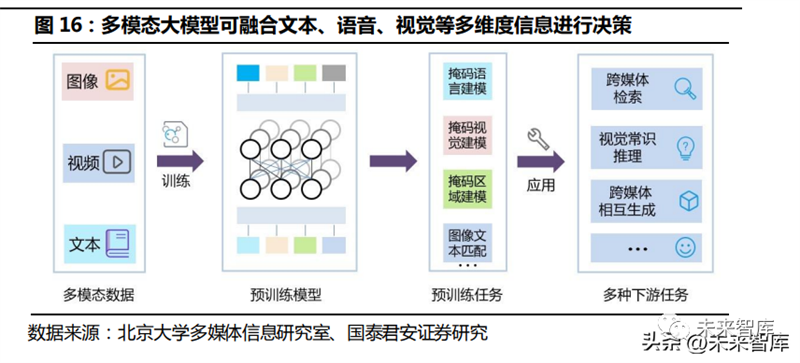

Decision-making: general language understanding and environmental awareness are the foundation of automated decision-making, and multimodal large models fit the decision-making needs of humanoid robots. Single-modality intelligence cannot solve decision problems that involve multimodal information, such as a spoken instruction telling the robot to "fetch the green apple on the table". Unified multimodal modeling aims to strengthen a model's cross-modal semantic alignment and move gradually toward a unified model, so that robots can integrate vision, speech, text, and other information and make decisions based on fused perception. Multimodal pre-trained large models may become AI infrastructure: they broaden the range and generality of tasks robots can complete, make robots compatible with many applications instead of limiting them to a single modality such as text or images, extend single-modality intelligence into fused intelligence, and let robots combine their perception of multimodal data to make decisions automatically.
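The "green apple" example can be made concrete with a deliberately simple sketch, assuming a keyword parser in place of a language model and a hard-coded list of detections in place of a vision model. The `Detection` record and its fields are hypothetical. The sketch shows only the fusion step: attributes parsed from the instruction are matched against attributes produced by perception to select one target.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    """One object reported by a hypothetical vision model."""
    label: str
    color: str
    on: str      # supporting surface the object rests on
    xyz: tuple   # position in the robot frame, metres


COLORS = {"red", "green", "yellow"}


def parse_command(text: str) -> dict:
    """Tiny keyword parser standing in for a language model."""
    words = text.lower().replace(",", " ").split()
    goal = {}
    for i, word in enumerate(words):
        if word in COLORS and i + 1 < len(words):
            goal["color"], goal["label"] = word, words[i + 1]
        if word == "on" and i + 2 < len(words):
            goal["on"] = words[i + 2]  # skip the article in "on the table"
    return goal


def ground(goal: dict, detections: list) -> Optional[Detection]:
    """Return the first detection consistent with every parsed attribute."""
    for det in detections:
        if all(getattr(det, key) == value for key, value in goal.items()):
            return det
    return None


scene = [
    Detection("apple", "red", "table", (0.6, 0.1, 0.8)),
    Detection("apple", "green", "shelf", (1.2, -0.4, 1.1)),
    Detection("apple", "green", "table", (0.5, -0.2, 0.8)),
]
goal = parse_command("Fetch the green apple on the table")
target = ground(goal, scene)
print(goal, target.xyz if target else None)
```

Neither modality alone is enough here: the instruction does not say where the apple is, and the detections do not say which apple is wanted. A multimodal model learns this alignment instead of relying on hand-written rules.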

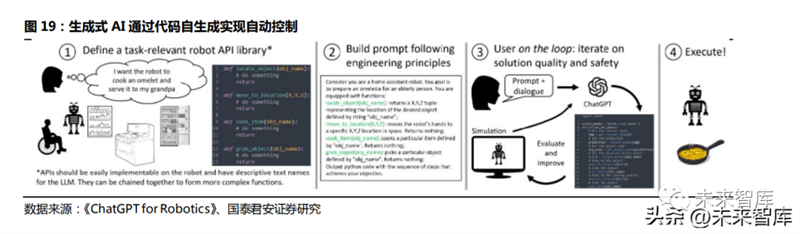

Control: generative AI gives robots self-control, ultimately closing the perception-decision-control loop. For humanoid robots to have general abilities, they first need "common sense", namely general language comprehension (speech) and scene comprehension (vision). Second, they need decision-making ability, that is, the ability to decompose the task implied by an instruction. Finally, they need self-control and execution capability. The code generation ability of generative AI will ultimately close the loop between a robot's perception, decision-making, and action, achieving self-control. Microsoft's team has in fact recently applied ChatGPT to robot control: after the robot's low-level function library is written in advance and its functions and the task goal are described, ChatGPT can generate code to complete tasks. Driven by generative AI, the barrier to robot programming will gradually fall, ultimately enabling robots to program and control themselves to complete the everyday tasks humans are used to.

2.3 OpenAI and Microsoft Applying Large Language Models to Robots

OpenAI leads investment in Norwegian humanoid robot company 1X Technologies. In 2017, OpenAI released Roboschool, open-source software for robot simulation, and demonstrated a new one-shot imitation learning algorithm on a robot, which learned a task from a single human demonstration performed in VR. In 2018, OpenAI released eight simulated robotics environments and a baseline implementation of Hindsight Experience Replay, which were used to train models that work on physical robots. In 2022, Halodi Robotics tested its medical assistant robot EVE at Sunnaas Hospital in Norway on logistics tasks. On March 28, 2023, OpenAI led an investment in 1X Technologies (formerly Halodi Robotics), a Norwegian humanoid robot company. Through the Ansys Startup Program, Halodi Robotics plans to use Ansys simulation software to develop humanoid robots that can work safely alongside humans in everyday settings.

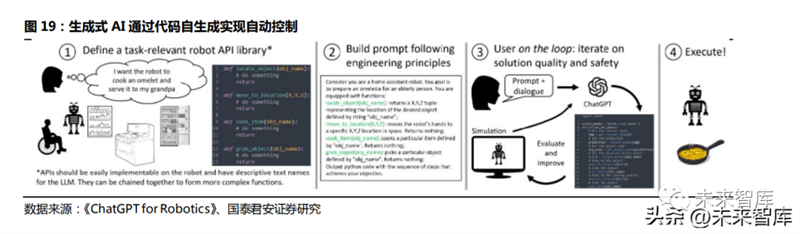

Microsoft proposes ChatGPT for Robotics, using ChatGPT to tackle robot application programming. In April 2023, Microsoft published a paper titled "ChatGPT for Robotics: Design Principles and Model Abilities" on its official website. The study aimed to see whether ChatGPT can go beyond text and reason about the physical world to help complete robot tasks. Controlling robots still relies heavily on hand-written code, and the team has been exploring how to change this by using OpenAI's language model ChatGPT to enable natural human-robot interaction.

Humans can move from being in the loop of robot processes to being on the loop. The paper proposes that, rather than asking the LLM to output code specific to a robot platform or library, one should create a simple high-level function library for ChatGPT to call and link it in the backend to the existing libraries and APIs of the various platforms, scenarios, and tools. The results show that ChatGPT lets humans interact with the language model through high-level commands such as natural language. Users can also feed perceptual information to ChatGPT continuously through text dialogue; ChatGPT parses this observation stream and outputs relevant actions within the dialogue, without generating code. In this way, humans can deploy across platforms and tasks seamlessly while evaluating the quality and safety of ChatGPT's output. The main human tasks in this robotics pipeline are: 1) Define a set of high-level robot APIs or a function library. The library can be designed for a specific robot type and should map onto existing low-level implementations in the robot's control stack or perception library. Descriptive API names matter, so that ChatGPT can infer each function's behavior. 2) Write a text prompt for ChatGPT that describes the task goal and states clearly which functions in the high-level library are available. The prompt can also include task constraints or instructions on how ChatGPT should structure its answer, such as using a particular programming language or auxiliary parsing components. 3) Evaluate ChatGPT's code output, either by direct inspection or in a simulator; if needed, give ChatGPT natural-language feedback on the quality and safety of its answer. 4) Once satisfied with the solution, deploy the final code to the robot.
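Steps 1 to 3 of this workflow can be sketched in a few lines. The API here (`get_position`, `move_to`, `grab`, `release`) is a hypothetical pick-and-place library invented for illustration, not functions from Microsoft's paper or any robot SDK. Step 1 defines descriptively named functions; step 2 builds the prompt from their signatures and docstrings; step 3 runs a candidate answer against a simulated robot that only logs commands. The candidate string is hand-written to stand in for a model reply; no language model is called.

```python
import inspect

LOG = []  # commands the simulated robot would execute


# Step 1: hypothetical high-level API with descriptive names. On a real robot,
# each body would call into the existing control or perception stack.
def get_position(object_name: str) -> tuple:
    """Return the (x, y, z) position of the named object in metres."""
    positions = {"sponge": (0.4, 0.1, 0.0), "bin": (0.0, 0.5, 0.0)}
    return positions[object_name]


def move_to(x: float, y: float, z: float) -> None:
    """Move the gripper to the given (x, y, z) position in metres."""
    LOG.append(("move_to", x, y, z))


def grab() -> None:
    """Close the gripper."""
    LOG.append(("grab",))


def release() -> None:
    """Open the gripper."""
    LOG.append(("release",))


API = [get_position, move_to, grab, release]


# Step 2: a prompt that states the goal and lists only the allowed functions.
def build_prompt(task: str, api: list) -> str:
    lines = ["You control a robot arm. Use only these Python functions:"]
    for fn in api:
        lines.append(f"- {fn.__name__}{inspect.signature(fn)}: {inspect.getdoc(fn)}")
    lines.append(f"Task: {task}")
    lines.append("Answer with Python code only.")
    return "\n".join(lines)


prompt = build_prompt("Put the sponge in the bin.", API)

# Step 3: check a candidate answer in simulation before deploying it (step 4).
candidate = """
x, y, z = get_position("sponge")
move_to(x, y, z)
grab()
x, y, z = get_position("bin")
move_to(x, y, z + 0.2)
release()
"""
exec(candidate, {fn.__name__: fn for fn in API})
print(prompt)
print(LOG)
```

Restricting the namespace to the listed functions mirrors the idea of exposing only the high-level library, but `exec` is used here purely for the toy simulation and is not a security sandbox; real deployments would need proper isolation and human review before step 4.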

ChatGPT can solve simple robot tasks zero-shot. For simple tasks, users only provide a text prompt and a description of the function library, with no code examples. In this way ChatGPT handled zero-shot spatio-temporal reasoning (controlling a planar robot that uses visual servoing to catch a basketball), controlled a real drone to search for objects, and operated a simulated drone to perform industrial inspection.

With a human user on the loop, ChatGPT can complete more complex robot control tasks. 1) Curriculum learning: ChatGPT is first taught simple skills for picking up and placing objects, then combines these skills logically to perform a more complex block-arrangement task. 2) Obstacle avoidance in AirSim: ChatGPT built most of the key modules of an obstacle-avoidance algorithm but needed human feedback on information such as the drone's orientation. Given high-level natural-language feedback, ChatGPT understood it and corrected the code in the appropriate places.
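The curriculum idea can be sketched with a toy block world of our own (not the paper's simulator or API; all names are hypothetical): two primitive skills are defined and checked first, and a tower-building skill is then composed purely from them, in the spirit of combining learned pick-and-place skills into a larger arrangement task.

```python
def make_world(blocks):
    """Map each block to what it rests on; every block starts on the table."""
    return {block: "table" for block in blocks}


def is_clear(world, block):
    """True if no other block rests on `block`."""
    return all(support != block for support in world.values())


# Curriculum step 1: primitive skills.
def pick(world, block):
    if not is_clear(world, block) or "gripper" in world.values():
        raise ValueError(f"cannot pick {block}")
    world[block] = "gripper"


def place(world, block, target):
    if world[block] != "gripper":
        raise ValueError(f"{block} is not held")
    if target != "table" and not is_clear(world, target):
        raise ValueError(f"{target} is not clear")
    world[block] = target


# Curriculum step 2: a composite skill built only from the primitives.
def build_tower(world, bottom_to_top):
    for below, above in zip(bottom_to_top, bottom_to_top[1:]):
        pick(world, above)
        place(world, above, below)
    return world


world = build_tower(make_world(["red", "green", "blue"]), ["red", "green", "blue"])
print(world)  # {'red': 'table', 'green': 'red', 'blue': 'green'}
```

Because the composite skill only calls already-validated primitives, any precondition failure surfaces in a primitive, which is the kind of localized error a human on the loop can point out in natural language.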

ChatGPT's dialogue system can parse observations and output relevant actions. 1) Closed-loop object navigation with APIs: ChatGPT is given access to computer vision models as part of its function library, and it builds a perception-action loop in its code output to estimate relative object angles, explore unknown environments, and navigate to user-specified objects. 2) Closed-loop visual-language navigation using ChatGPT's dialogue: in a simulated scene, the human user enters new state observations as dialogue text, and ChatGPT replies only with a forward distance and a turning angle, using dialogue alone to guide the robot step by step to areas of interest.
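The dialogue-driven navigation loop can be sketched as follows, assuming a reply format of our own ("forward D m, turn A deg") and a planar robot that turns in place before driving straight; the paper's exact message format is not reproduced here. Each turn, the robot state is rendered as text, the reply is parsed into a distance and an angle, and the pose is updated.

```python
import math
import re

# Assumed reply format, e.g. "forward 1.5 m, turn -30 deg".
REPLY = re.compile(r"forward\s+(-?[\d.]+)\s*m?\s*,\s*turn\s+(-?[\d.]+)\s*deg",
                   re.IGNORECASE)


def observe(pose):
    """Render the robot state as text for the next dialogue turn."""
    x, y, heading = pose
    return f"You are at x={x:.2f} m, y={y:.2f} m, heading {math.degrees(heading):.0f} deg."


def parse_reply(text):
    """Extract (distance_m, turn_deg) from a model reply."""
    match = REPLY.search(text)
    if match is None:
        raise ValueError(f"unrecognised reply: {text!r}")
    return float(match.group(1)), float(match.group(2))


def step(pose, distance, turn_deg):
    """Turn in place by turn_deg, then drive distance metres straight ahead."""
    x, y, heading = pose
    heading += math.radians(turn_deg)
    return (x + distance * math.cos(heading),
            y + distance * math.sin(heading),
            heading)


# Hand-written stand-ins for model replies; a real loop would send observe(pose)
# to the language model and read its answer each turn.
replies = ["Forward 2 m, turn 0 deg", "Forward 1 m, turn 90 deg"]
pose = (0.0, 0.0, 0.0)
transcript = []
for reply in replies:
    transcript.append(observe(pose))
    pose = step(pose, *parse_reply(reply))
print(observe(pose))
```

Keeping the model's output to two numbers makes every reply easy to validate before the robot moves, which fits the paper's emphasis on the human evaluating output quality and safety.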

3. A humanoid form makes robot motion execution more versatile

Execution ability (the robot's limbs): locomotion (legs) plus fine manipulation (hands). Giving robots a humanoid form makes their execution abilities more versatile. The environments in which robots perform tasks, including buildings, roads, facilities, and tools, are built around the human body; the world is designed for the convenience of human-shaped beings. A robot with a new form would require people to redesign the environment around it. Designing a robot for tasks within a narrow range is relatively easy, but making robots more versatile requires humanoid robots that can act as human stand-ins. In this chapter we compare two representative products, Boston Dynamics' Atlas and Tesla's Optimus, in terms of drive, environmental perception, and motion control, to explore where commercial humanoid motion-control solutions are heading.

Boston Dynamics' Atlas is positioned as forward-looking technology research, exploring what the technology makes possible rather than pursuing commercialization. In terms of hardware architecture, Atlas has excellent dynamic performance, high instantaneous power density, and stable motion posture, allowing it to perform high-load, highly complex movements; it is a showcase of technology. Commercialization is not currently a main consideration for Boston Dynamics, and the Atlas project serves mainly as a research platform for academic experiments. Tesla's Optimus, by contrast, is committed to scaling, commercializing, and standardizing humanoid robots; with commercialization as the goal, cost and energy consumption have become key considerations for the Tesla team.

3.1 Drive: hydraulic drive vs electric drive

3.1.1 Electric drive has low cost, easy maintenance, high control accuracy, and high commercial potential

The mainstream drive solutions for humanoid robots are hydraulic drive and electric drive (servo motor + reducer). Compared with electric drive, hydraulic drive offers higher output torque, higher power density, and stronger overload capacity, meeting the high-load and fast-motion requirements of Boston Dynamics' Atlas. However, hydraulic drive consumes more energy, costs more, and is prone to leakage and poor maintainability. On the one hand, high-load actions (such as parkour and backflips) are non-essential in commercial scenarios. On the other hand, as the power density and response speed of electric drive systems keep improving, we believe that, given electric drive's low cost, easy maintenance, and mature applications, electrically driven humanoid robots are more likely to be commercialized.

3.1.2 Boston Dynamics Atlas: Adopting a "Hydraulic Drive" Solution

Atlas has 28 hydraulic actuators across its body, enabling complex actions under high loads. Its hydraulic power unit (HPU) is very compact and has a high power density (about 5 kW from about 5 kg). The electro-hydraulic system connects the hydraulic pump to the actuators through fluid lines, achieving fast response and precise force control, and its hydraulic actuators with high instantaneous power density let the robot run, jump, and flip. The robot's structural strength comes from its highly integrated structural assembly. Based on officially disclosed images and patent details, we infer that the ankle, knee, and elbow joints are driven by linear hydraulic cylinders, while the hips, shoulders, wrists, and waist are driven by rotary (swing) hydraulic actuators.

3.1.3 Tesla Optimus: Adopting an "Electric Drive" Solution

A single Optimus has 40 actuators across its body, roughly 6-7 times as many as a typical six-axis articulated industrial robot. The body joints use servo motors with reducers or screws, 28 actuators in total; the hands use an underactuated design with a motor + tendon-drive transmission, with 6 motors driving 11 degrees of freedom in each hand.

According to Tesla AI Day, of the six actuator types Tesla developed in-house, the rotary joint designs are inherited from industrial robots, while the linear actuators and micro servo motors are new requirements specific to humanoid robots. They are broken down below.

Rotary joints (shoulder, hip, waist/abdomen): servo motor + reducer. We estimate that a single humanoid robot will use 6 RV reducers (hip, waist/abdomen) and 8 harmonic reducers (shoulder, wrist). In Tesla's Optimus actuator scheme, RV reducers are large, have strong load capacity, and are stiff, which suits high-load joints such as the hip and waist/abdomen: 2 × 2 for the hips plus 2 for the two waist/abdomen degrees of freedom, 6 in total. Harmonic reducers are small, have high transmission ratios, and are precise, which suits the shoulder and wrist joints: 3 × 2 for the shoulders plus 1 × 2 for the wrists, 8 in total. As more manufacturers enter the field, actuator solutions may differ; if linear actuators are replaced by rotary ones, the number of reducers per robot will increase.

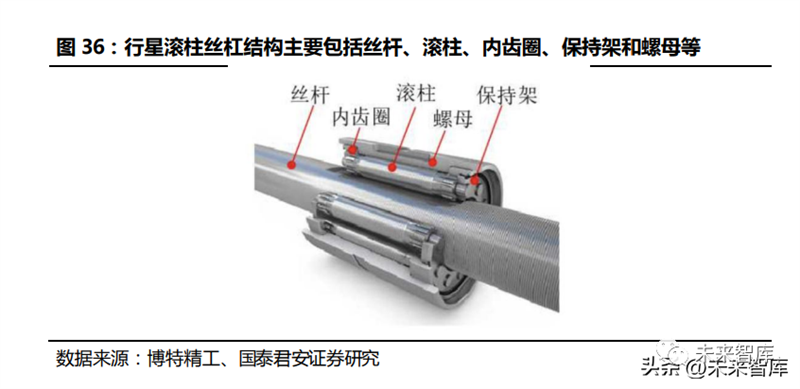

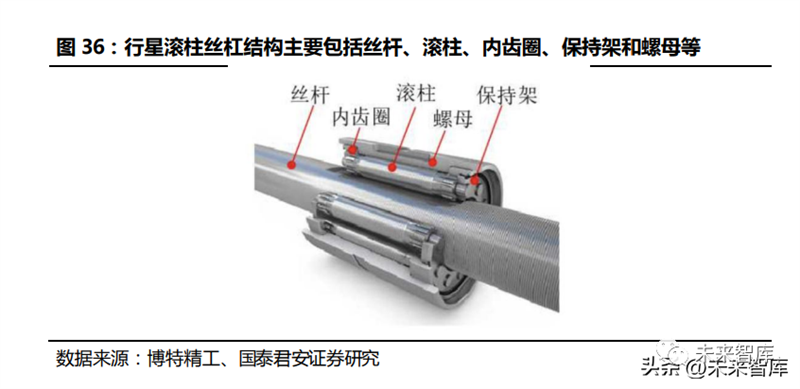

Joints with small swing angles (knee, elbow, ankle, wrist): linear actuator (servo motor + lead screw). The integrated servo electric cylinder (servo motor + screw) has self-locking capability and lower energy consumption than a pure rotary joint scheme. Linear actuators make efficient use of space and can provide large driving forces. We speculate that linear actuators combining a torque motor with a planetary roller screw will be used for the linearly actuated joints (hip, knee, ankle, elbow, wrist), with 14 linear actuators in total.
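A linear actuator drives a hinge joint by changing the distance between two mounting points, one on each link. By the law of cosines, with mounting points at distances a and b from the joint axis and joint angle θ between them, the actuator length is L = √(a² + b² − 2ab·cos θ), and the lever arm for converting actuator force into joint torque is ab·sin θ / L. The mounting distances below are hypothetical values chosen only for illustration.

```python
import math


def actuator_length(theta_rad: float, a: float, b: float) -> float:
    """Distance between mounting points a and b (m) from the joint axis at angle theta."""
    return math.sqrt(a * a + b * b - 2 * a * b * math.cos(theta_rad))


def joint_angle(length: float, a: float, b: float) -> float:
    """Inverse of actuator_length: joint angle for a given actuator length."""
    c = (a * a + b * b - length * length) / (2 * a * b)
    return math.acos(max(-1.0, min(1.0, c)))  # clamp against rounding error


def moment_arm(theta_rad: float, a: float, b: float) -> float:
    """Perpendicular distance from joint axis to actuator line; torque = force * moment arm."""
    return a * b * math.sin(theta_rad) / actuator_length(theta_rad, a, b)


if __name__ == "__main__":
    a, b = 0.05, 0.30  # hypothetical mounting offsets for a knee-like joint (m)
    lo, hi = math.radians(60), math.radians(170)
    stroke = actuator_length(hi, a, b) - actuator_length(lo, a, b)
    print(f"stroke over 60-170 deg: {stroke * 1000:.1f} mm")
    print(f"moment arm at 90 deg: {moment_arm(math.pi / 2, a, b) * 1000:.1f} mm")
```

Because the moment arm varies with angle, the same screw force yields different joint torques across the range of motion, which matters when choosing mounting points for a high-load joint.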

With its high load capacity, high stiffness, and long service life, the planetary roller screw may become a key transmission component in the linear actuators of humanoid robots. According to information presented at Tesla AI Day 2022, the Optimus linear actuator is a servo electric cylinder with an integrated planetary roller screw. We believe it is quite likely that planetary roller screws will serve as the transmission in the servo electric cylinders of the lower-limb hip, knee, and ankle joints and the upper-limb elbow joint. However, the planetary roller screw has a complex structure and is difficult to machine, which makes it expensive; adapting its design and manufacturing process to the needs of humanoid robots, and thereby reducing cost, is a prerequisite for large-scale application.
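The appeal of a screw drive is that it turns modest motor torque into large axial force. For any screw, one revolution advances the nut by one lead l, so the ideal thrust is F = 2π·η·T / l and the linear speed is v = n·l. The torque, lead, efficiency, and speed below are hypothetical example values, not Optimus parameters.

```python
import math


def screw_thrust_n(torque_nm: float, lead_m: float, efficiency: float) -> float:
    """Axial force of a screw drive: F = 2*pi*eta*T / lead."""
    return 2 * math.pi * efficiency * torque_nm / lead_m


def screw_torque_nm(force_n: float, lead_m: float, efficiency: float) -> float:
    """Motor torque needed to push a given axial load: T = F*lead / (2*pi*eta)."""
    return force_n * lead_m / (2 * math.pi * efficiency)


def screw_speed_m_s(motor_rpm: float, lead_m: float) -> float:
    """Linear speed: the nut advances one lead per screw revolution."""
    return motor_rpm / 60.0 * lead_m


if __name__ == "__main__":
    # hypothetical: 1 N*m motor, 2 mm lead, 80 % efficiency, 3000 rpm
    print(f"{screw_thrust_n(1.0, 0.002, 0.8):.0f} N")
    print(f"{screw_speed_m_s(3000, 0.002):.2f} m/s")
```

A shorter lead raises thrust but lowers speed for the same motor, so the lead choice trades force against joint velocity.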

Dexterous hand: a single Optimus hand has 6 actuators and achieves 11 degrees of freedom. It is driven by micro motors; the underactuated scheme is highly cost-effective, while the tendon-driven transmission carries greater uncertainty. "Underactuated" means that a system has fewer actuators than degrees of freedom. Because a hand inherently has many degrees of freedom, designers reduce the number of motors (i.e. actuators) to improve integration and compactness, cut cost, and simplify subsequent motion control, producing an underactuated design. Optimizing the mechanical structure so that fewer actuators drive more degrees of freedom, and thus saving cost, is currently the mainstream choice in both commercial products and university dexterous-hand research.
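The simplest form of underactuation is a fixed coupling in which one motor drives several joints in set ratios. The 6-motor, 11-joint layout and the ratios below are hypothetical, chosen only to match the counts in the text; they are not Tesla's published design. Real underactuated fingers also use springs or compliance so that joints can conform to an object's shape, which a rigid linear coupling does not capture.

```python
# Hypothetical layout: (joint name, driving motor index, joint_angle / motor_angle ratio)
COUPLING = [
    ("thumb_rotation", 0, 1.0),
    ("thumb_mcp", 1, 0.6), ("thumb_ip", 1, 0.4),
    ("index_mcp", 2, 0.55), ("index_pip", 2, 0.45),
    ("middle_mcp", 3, 0.55), ("middle_pip", 3, 0.45),
    ("ring_mcp", 4, 0.55), ("ring_pip", 4, 0.45),
    ("little_mcp", 5, 0.55), ("little_pip", 5, 0.45),
]

NUM_JOINTS = len(COUPLING)
NUM_MOTORS = len({motor for _, motor, _ in COUPLING})


def joint_angles(motor_angles: list[float]) -> dict[str, float]:
    """Map motor angles (rad) to joint angles (rad) through the fixed coupling."""
    if len(motor_angles) != NUM_MOTORS:
        raise ValueError(f"expected {NUM_MOTORS} motor angles")
    return {name: ratio * motor_angles[motor] for name, motor, ratio in COUPLING}


if __name__ == "__main__":
    print(NUM_JOINTS, NUM_MOTORS, NUM_JOINTS - NUM_MOTORS)  # DOF, actuators, degree of underactuation
    print(joint_angles([0.0, 1.0, 0.8, 0.8, 0.8, 0.8]))      # thumb flexes, fingers curl
```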

Driving schemes for dexterous hands vary widely, and the key lies in lightweight, low-cost motors. In terms of mechanical transmission, mainstream dexterous-hand solutions include tendon drives, linkages, gears and racks, and material deformation. Driving schemes differ markedly between hands: the Ritsumeikan Hand drives 15 joints with 2 actuators through coupled wiring; the Stanford/JPL dexterous hand uses 16 motors per hand; and the Shadow Hand uses 30 motors per hand for a total of 24 degrees of freedom. A humanoid robot's hand must be lightweight and compact yet deliver strong grip force, so its motors should be small, light, accurate, and high-torque. The coreless ("hollow cup") motor is compact, with high power density and low energy consumption, and fits the requirements of humanoid-robot hands well.

The Tesla Optimus hand uses a motor + tendon drive, and its hand transmission scheme may yet be optimized. Although tendon drives give the hand great flexibility and greatly reduce design difficulty and system complexity, their reliability and transmission efficiency are lower than those of traditional linkages, gear racks, and similar mechanisms, so this may be a stopgap for the R&D team's short-term development.
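One source of the lower efficiency of tendon drives is friction where the tendon slides over guides or sheaths. The capstan (Euler-Eytelwein) relation gives T_out = T_in·e^(−μθ) for a total wrap angle θ. The friction coefficient, routing angles, and pulley radius below are hypothetical; tendons running over bearing-mounted pulleys lose considerably less than this sliding-friction model suggests.

```python
import math


def tendon_output_tension(input_tension_n: float, friction_coeff: float,
                          wrap_angles_rad: list[float]) -> float:
    """Capstan friction: tension drops by exp(-mu * theta) over the total wrap angle."""
    return input_tension_n * math.exp(-friction_coeff * sum(wrap_angles_rad))


def joint_torque_nm(tension_n: float, pulley_radius_m: float) -> float:
    """Torque produced at a joint pulley by the arriving tendon tension."""
    return tension_n * pulley_radius_m


if __name__ == "__main__":
    # hypothetical routing: four 90-degree bends, mu = 0.1, 10 N from the motor
    t_out = tendon_output_tension(10.0, 0.1, [math.pi / 2] * 4)
    print(f"efficiency: {t_out / 10.0:.0%}")
    print(f"joint torque: {joint_torque_nm(t_out, 0.008):.3f} N*m")  # 8 mm pulley
```

Because losses compound exponentially with every bend, routing a tendon from a forearm motor through the wrist to a fingertip is where efficiency suffers most.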

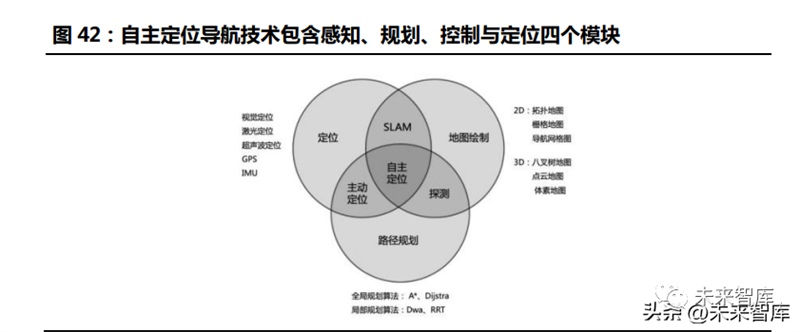

3.2 Environmental perception: depth camera+LiDAR VS pure vision solution

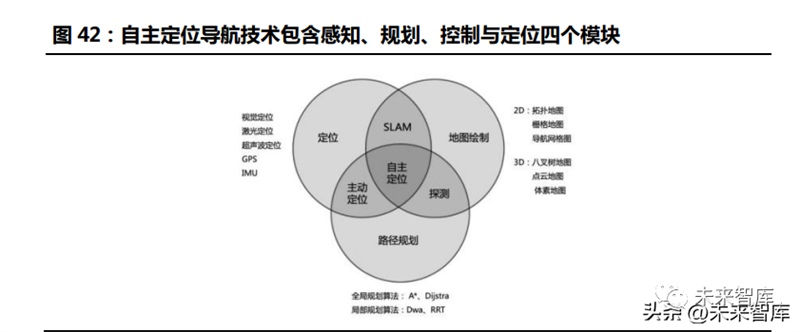

The sensing principles used for robot localization and autonomous movement mainly include vision, laser, ultrasound, GPS, and IMU, corresponding to the different sensor categories in a robot's perception system. SLAM (Simultaneous Localization and Mapping) is a mature and widely used localization technology: the robot collects and processes data from its sensors to estimate its own position and pose and to build a map of the scene. The SLAM problem can be stated as follows: a robot starts from an unknown position in an unknown environment, localizes itself during motion from its pose estimates and sensor data, and builds the map incrementally. Once localization and a map are available, autonomous movement is achieved with path-planning algorithms (global planning, local planning, obstacle avoidance).
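As an illustration of the global-planning step, the sketch below runs A* search over a 2D occupancy grid such as a SLAM map might produce. The grid encoding ('#' for occupied cells), 4-connected moves, and unit step cost are simplifying assumptions; production planners use finer costmaps and smoother motion primitives.

```python
import heapq


def astar(grid: list[str], start: tuple[int, int], goal: tuple[int, int]):
    """A* on a 4-connected grid; '#' cells are obstacles. Returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:  # walk parents back to the start
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry superseded by a cheaper route
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable


if __name__ == "__main__":
    grid = ["....",
            ".##.",
            "...."]
    print(astar(grid, (0, 0), (2, 3)))
```

Local planning and obstacle avoidance then follow this global path while reacting to obstacles the map did not contain, such as pedestrians.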

3.2.1 Boston Dynamics Atlas: depth camera + LiDAR

The Boston Dynamics Atlas perception scheme combines depth cameras and LiDAR and performs gait planning based on a multi-plane segmentation algorithm. Atlas's perception and vision technology is relatively mature. Atlas uses a ToF depth camera to generate point clouds at 15 frames per second; a multi-plane segmentation algorithm extracts environmental surfaces from the point cloud, and the data is mapped to recognize surrounding objects. The onboard industrial computer then plans gaits from the recognized surfaces and objects to accomplish tasks such as obstacle avoidance, ground-condition detection, and cruising. IHMC (the Institute for Human and Machine Cognition) is a leading institution in robot control algorithms, focused on key algorithms for humanoid walking; the algorithms that command Atlas to stand and walk are derived from IHMC's work.
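Boston Dynamics has not published its segmentation code, so the sketch below swaps in a common textbook technique: sequential RANSAC, which repeatedly fits the plane with the most inliers and removes those points. The distance threshold, iteration count, and minimum plane size are hypothetical tuning values, and the point cloud is a synthetic floor plus a raised step.

```python
import math
import random
from typing import Optional

Point = tuple[float, float, float]
Plane = tuple[float, float, float, float]  # (a, b, c, d): a*x + b*y + c*z + d = 0, unit normal


def plane_through(p1: Point, p2: Point, p3: Point) -> Optional[Plane]:
    ux, uy, uz = p2[0] - p1[0], p2[1] - p1[1], p2[2] - p1[2]
    vx, vy, vz = p3[0] - p1[0], p3[1] - p1[1], p3[2] - p1[2]
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx  # cross product
    norm = math.sqrt(nx * nx + ny * ny + nz * nz)
    if norm < 1e-9:
        return None  # collinear sample, no unique plane
    a, b, c = nx / norm, ny / norm, nz / norm
    return a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2])


def ransac_plane(points, threshold, iterations, rng):
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_through(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        inliers = [p for p in points if abs(a * p[0] + b * p[1] + c * p[2] + d) < threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


def segment_planes(points, threshold=0.01, iterations=200, min_inliers=20, max_planes=5, seed=0):
    """Extract planes largest-first until too few points support a new plane."""
    rng = random.Random(seed)
    remaining = list(points)
    planes = []
    while len(remaining) >= 3 and len(planes) < max_planes:
        plane, inliers = ransac_plane(remaining, threshold, iterations, rng)
        if plane is None or len(inliers) < min_inliers:
            break
        planes.append((plane, inliers))
        used = set(inliers)
        remaining = [p for p in remaining if p not in used]
    return planes


if __name__ == "__main__":
    floor = [(float(x), float(y), 0.0) for x in range(10) for y in range(10)]
    step = [(float(x), float(y), 0.2) for x in range(10, 15) for y in range(10)]
    for (a, b, c, d), inl in segment_planes(floor + step):
        print(f"normal=({a:.2f}, {b:.2f}, {c:.2f}) offset={d:.2f} points={len(inl)}")
```

The extracted surfaces (here a floor and a 0.2 m step) are the kind of information a footstep planner can use to decide where the feet may land.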

3.2.2 Tesla Optimus: Pure visual solution, lower cost

Optimus's environmental perception uses a camera-based pure-vision scheme, which can be ported cheaply from Tesla's Full Self-Driving system. The Optimus head carries three cameras (a fisheye camera plus left and right cameras) and perceives the environment through panoptic segmentation and a self-developed 3D reconstruction algorithm (Occupancy Network). Compared with perception hardware such as LiDAR, pure vision costs less but demands high computing power. The robot inherits the Autopilot algorithm framework, including the HydraNet neural network, which draws on Google's Transformer model, and trains networks suited to the robot on newly collected data to achieve 3D reconstruction of the environment, path planning, autonomous navigation, dynamic interaction, and more. Porting Tesla's Full Self-Driving (FSD) system makes the robot's vision scheme more accurate and intelligent without increasing hardware cost.
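The Occupancy Network itself is a neural network and is not reproduced here. As a hedged illustration of the kind of output it produces, the sketch below stores occupied space as a sparse set of voxels and answers a simple query: whether a box the robot wants to move through is free. The 0.1 m voxel size and the points are hypothetical.

```python
import math

Voxel = tuple[int, int, int]


def voxel_index(point: tuple[float, float, float], size: float) -> Voxel:
    """Quantize a 3D point (m) to integer voxel coordinates; floor handles negatives."""
    return (math.floor(point[0] / size), math.floor(point[1] / size), math.floor(point[2] / size))


def build_occupancy(points, size: float) -> set[Voxel]:
    """Sparse occupancy: only voxels containing at least one point are stored."""
    return {voxel_index(p, size) for p in points}


def is_box_free(occupied: set[Voxel], lo, hi, size: float) -> bool:
    """True if no occupied voxel overlaps the axis-aligned box from lo to hi."""
    (x0, y0, z0), (x1, y1, z1) = voxel_index(lo, size), voxel_index(hi, size)
    return not any((ix, iy, iz) in occupied
                   for ix in range(x0, x1 + 1)
                   for iy in range(y0, y1 + 1)
                   for iz in range(z0, z1 + 1))


if __name__ == "__main__":
    occ = build_occupancy([(0.05, 0.05, 0.05), (1.05, 1.05, 0.55)], 0.1)
    print(is_box_free(occ, (0.2, 0.2, 0.0), (0.5, 0.5, 0.3), 0.1))  # clear region
    print(is_box_free(occ, (0.0, 0.0, 0.0), (0.3, 0.3, 0.3), 0.1))  # overlaps an obstacle
```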

3.3 Motion control: No universal controller solution has yet been formed

Motion-control algorithms are a core competency, and each humanoid robot maker develops its own. Humanoid robots demand strong motion-control and perception computing capabilities, and the number and types of actuators differ significantly between manufacturers. Control algorithms may therefore become manufacturers' core competitive advantage and are likely to remain developed in-house. In addition, understanding customers' application scenarios and process requirements is an important factor in a humanoid robot's control scheme. Downstream scenarios are currently fragmented, so it is difficult for a single manufacturer to make humanoid robots universal across scenarios.

3.3.1 Motion control algorithms: similar approaches, both combining offline behavior libraries with real-time adjustment

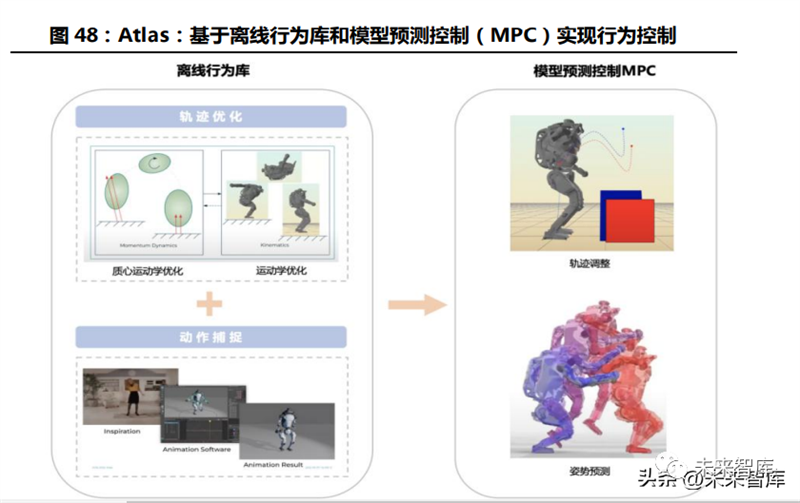

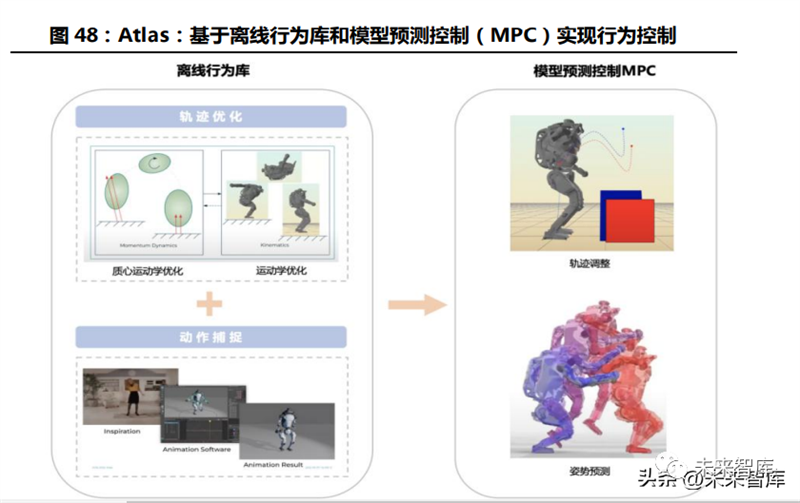

Boston Dynamics Atlas: behavior control is based on an offline behavior library plus Model Predictive Control (MPC). The offline behavior library is built with trajectory optimization (centroidal dynamics optimization + kinematic optimization) and motion capture, and technicians can add new trajectories to the library to give the robot new capabilities. Once assigned a behavioral goal, the robot selects the library behaviors closest to the goal, yielding theoretically feasible, dynamic, continuous motion. MPC then fine-tunes parameters (forces, posture, joint timing, etc.) using real-time sensor feedback and the behavior library, compensating for differences between the real environment and the ideal model as well as other real-time factors. Because MPC runs online, the robot can deviate from the templates and predict transition motions between two behaviors (such as a jump and a backflip), which simplifies building the behavior library.
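The two-layer idea (pick a template offline, correct it online) can be shown on a toy 1-D problem. In this sketch the behavior library, its trajectories, and all weights are hypothetical. The online layer is the simplest form of MPC: an unconstrained linear MPC on a double-integrator model, solved by a backward Riccati recursion over the horizon. Atlas's real controller handles whole-body dynamics and constraints and is far more complex.

```python
# Hypothetical offline behavior library: name -> reference positions (m)
BEHAVIOR_LIBRARY = {
    "step_forward": [0.0, 0.1, 0.25, 0.4],
    "long_stride": [0.0, 0.2, 0.45, 0.7],
    "hop": [0.0, 0.3, 0.6, 1.0],
}


def choose_behavior(goal: float) -> str:
    """Offline layer: pick the library entry whose end point is closest to the goal."""
    return min(BEHAVIOR_LIBRARY, key=lambda name: abs(BEHAVIOR_LIBRARY[name][-1] - goal))


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def add(A, B, sign=1.0):
    return [[a + sign * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def mpc_gain(A, B, Q, r, horizon):
    """Unconstrained linear MPC: Riccati recursion backward over the horizon,
    returning the first-step feedback gain K (control u = -K x)."""
    P, K = Q, [[0.0] * len(A)]
    At, Bt = transpose(A), transpose(B)
    for _ in range(horizon):
        BtP = matmul(Bt, P)                       # 1 x n
        s = r + matmul(BtP, B)[0][0]              # scalar input -> scalar
        K = [[v / s for v in matmul(BtP, A)[0]]]  # 1 x n
        AtP = matmul(At, P)
        P = add(add(Q, matmul(AtP, A)), matmul(matmul(AtP, B), K), sign=-1.0)
    return K


def track(goal, x0=(0.0, 0.0), dt=0.1, steps=100, horizon=20):
    """Online layer: drive a double-integrator 'robot' to the chosen behavior's end point."""
    A = [[1.0, dt], [0.0, 1.0]]
    B = [[0.5 * dt * dt], [dt]]
    # Fixed model and horizon make the gain time-invariant, so it is computed once;
    # a real MPC re-solves every cycle with constraints and updated state estimates.
    K = mpc_gain(A, B, [[1.0, 0.0], [0.0, 0.1]], r=0.01, horizon=horizon)
    pos, vel = x0
    for _ in range(steps):
        u = -(K[0][0] * (pos - goal) + K[0][1] * vel)  # feedback on the measured state
        pos, vel = pos + dt * vel + 0.5 * dt * dt * u, vel + dt * u
    return pos, vel


if __name__ == "__main__":
    name = choose_behavior(0.65)
    print(name, track(BEHAVIOR_LIBRARY[name][-1]))
```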

Tesla Optimus: the gait-planning approach is similar to Atlas's: a motion planner generates a reference trajectory, and the controller adjusts and optimizes behavior in real time from sensor information, although the gait-control algorithm is not yet mature. The motion planner first generates a reference trajectory from the desired path and determines the dynamic parameters of the robot model. The controller estimates the robot's posture from sensor data and corrects its behavior parameters according to the difference between the real environment and the ideal model to obtain the actual behavior. Between successive steps, the algorithm follows the phases of human walking (from initial foot contact to final toe-off) together with coordinated upper-body arm swing, aiming for natural arm swing, long strides, and straight-knee walking to improve walking efficiency and posture. At present the gait control is not mature enough, with weak disturbance rejection and poor dynamic stability; Tesla engineers have stated that Optimus's balance problem may take 18-36 months to solve.
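The gait-phase idea (initial contact through toe-off, with the arms swinging against the legs) can be sketched as a simple phase scheduler. The 1 s cycle, 60 % stance share, 20° arm amplitude, and cosine arm profile are all assumptions for illustration; the stance share is roughly the value usually quoted for human walking, and nothing here reflects Tesla's actual controller.

```python
import math

CYCLE_S = 1.0            # hypothetical gait period (s)
STANCE_FRACTION = 0.6    # assumption: roughly human-like stance share of the cycle
ARM_AMPLITUDE_DEG = 20.0  # hypothetical arm-swing amplitude


def leg_phase(t: float, offset: float) -> float:
    """Normalized phase in [0, 1); 0 is initial foot contact. Legs are offset by 0.5."""
    return (t / CYCLE_S + offset) % 1.0


def leg_state(phase: float) -> str:
    """Stance from initial contact until toe-off, then swing."""
    return "stance" if phase < STANCE_FRACTION else "swing"


def arm_angle_deg(opposite_leg_phase: float) -> float:
    """Each arm swings forward with the opposite leg (forward = positive)."""
    return ARM_AMPLITUDE_DEG * math.cos(2 * math.pi * opposite_leg_phase)


def gait_snapshot(t: float) -> dict:
    left, right = leg_phase(t, 0.0), leg_phase(t, 0.5)
    return {
        "left_leg": leg_state(left), "right_leg": leg_state(right),
        "left_arm_deg": arm_angle_deg(right), "right_arm_deg": arm_angle_deg(left),
    }


if __name__ == "__main__":
    for t in (0.0, 0.3, 0.65):
        print(t, gait_snapshot(t))
```

With a stance share above 50 %, both feet are on the ground for part of each cycle (double support), which the scheduler reproduces at t = 0.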

Similarly, Optimus's upper-limb manipulation uses an offline behavior library built from motion capture and inverse-kinematics mapping, and achieves adaptive manipulation through real-time trajectory optimization.
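A minimal version of the motion-capture-to-robot mapping: scale a captured wrist position to the robot's arm length, then solve inverse kinematics for a planar two-link arm (shoulder and elbow) in closed form. The link lengths and the uniform-scaling retargeting rule are hypothetical simplifications; a real arm has more joints and would use numerical IK with joint limits.

```python
import math


def retarget(human_xy, human_reach: float, robot_reach: float):
    """Scale a captured wrist position (relative to the shoulder) to the robot's reach."""
    s = robot_reach / human_reach
    return human_xy[0] * s, human_xy[1] * s


def ik_2link(x: float, y: float, l1: float, l2: float, elbow_up: bool = False):
    """Closed-form inverse kinematics for a planar 2-link arm; returns (q1, q2) in rad."""
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1.0:
        raise ValueError("target out of reach")
    s2 = math.sqrt(1.0 - c2 * c2) * (-1.0 if elbow_up else 1.0)
    q2 = math.atan2(s2, c2)
    q1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return q1, q2


def fk_2link(q1: float, q2: float, l1: float, l2: float):
    """Forward kinematics, used to check the IK solution."""
    return (l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
            l1 * math.sin(q1) + l2 * math.sin(q1 + q2))


if __name__ == "__main__":
    L1, L2 = 0.30, 0.25  # hypothetical robot upper-arm and forearm lengths (m)
    target = retarget((0.5, 0.2), human_reach=0.60, robot_reach=L1 + L2)
    q = ik_2link(*target, L1, L2)
    print(target, q, fk_2link(*q, L1, L2))
```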

3.3.2 Motion controllers: mostly designed in-house, with requirements differing significantly between manufacturers

Humanoid robots collect and process multimodal data, and their actuation is far more complex than that of industrial robots, so their controllers need high real-time computing power and a high degree of integration. Humanoid robots carry far more types and numbers of sensors than industrial robots and must simultaneously perform 3D map building, path planning, multi-sensor data acquisition and processing, and closed-loop control while moving. The process is complex, with higher data dimensionality and volume than in industrial robots, and demands high computing power. Industrial robots generally perform recognition and detection through external frame grabbers and image-processing software, whereas a humanoid robot in mobile scenarios needs the image processor integrated into the controller chip, which imposes chip-integration requirements. Humanoid-robot controllers are mostly designed in-house, and requirements differ significantly between manufacturers. Downstream scenarios for humanoid robots remain highly uncertain, and manufacturers' drive schemes (drive methods, motor schemes), perception schemes (pure vision, multi-sensor fusion, etc.), and control algorithms differ considerably. Robots therefore place different demands on controller computing power and storage, so controller compositions vary and in-house design predominates. We believe humanoid-robot controllers are likely to adopt a distributed control architecture consisting of a core controller and multiple small controllers, with the small controllers driving the joints of each body region.
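The distributed architecture described above can be sketched as a core controller that dispatches joint targets to per-region joint controllers, which run their own faster loops. Everything here is hypothetical: the region names, the PD gains, the unit-inertia joint model, and the 10:1 ratio between joint-loop and core-loop rates.

```python
from dataclasses import dataclass, field


@dataclass
class JointController:
    """Hypothetical low-level joint controller: PD torque law on a unit-inertia joint."""
    kp: float = 100.0
    kd: float = 20.0  # 2*sqrt(kp): critically damped for unit inertia
    q: float = 0.0
    dq: float = 0.0
    target: float = 0.0

    def step(self, dt: float) -> None:
        tau = self.kp * (self.target - self.q) - self.kd * self.dq
        self.dq += tau * dt       # semi-implicit Euler: update velocity first
        self.q += self.dq * dt


@dataclass
class CoreController:
    """Core controller: plans targets and hands them to regional joint controllers."""
    regions: dict[str, list[JointController]] = field(default_factory=dict)

    def dispatch(self, targets: dict[str, list[float]]) -> None:
        for region, values in targets.items():
            for ctrl, value in zip(self.regions[region], values):
                ctrl.target = value

    def step(self, dt: float, substeps: int) -> None:
        # joint loops run several times per core-controller cycle
        for _ in range(substeps):
            for ctrls in self.regions.values():
                for ctrl in ctrls:
                    ctrl.step(dt)


if __name__ == "__main__":
    core = CoreController({
        "left_leg": [JointController() for _ in range(3)],
        "right_arm": [JointController() for _ in range(2)],
    })
    core.dispatch({"left_leg": [0.3, -0.6, 0.3], "right_arm": [0.5, 1.0]})
    for _ in range(200):          # 200 core cycles x 10 joint steps x 1 ms = 2 s
        core.step(0.001, 10)
    print({r: [round(c.q, 4) for c in cs] for r, cs in core.regions.items()})
```

Splitting the loops this way lets the core controller spend its compute on perception and planning while the regional controllers close fast, local feedback loops near the motors.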

Boston Dynamics Atlas: three onboard industrial computers handle the computation for perception and control. The controller takes in data from the LiDAR and ToF depth cameras, generates maps and paths, and plans target behaviors from the offline behavior library; during actual motion, it adjusts and optimizes the action sequence in real time using sensor data such as IMU readings, joint positions, forces, oil pressure, and temperature.

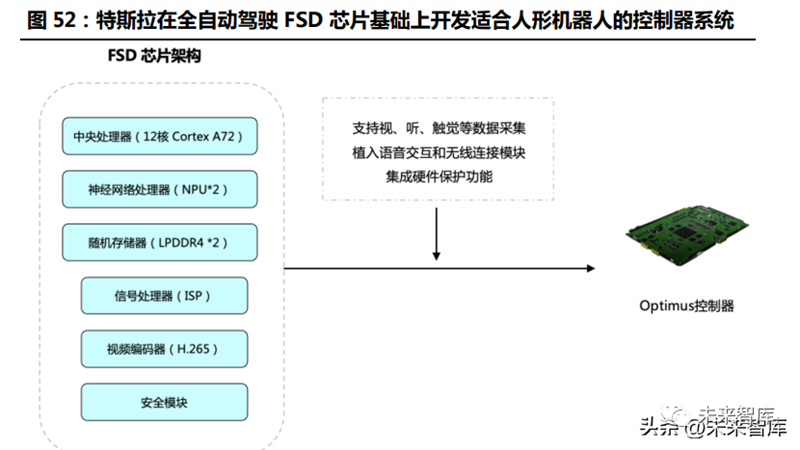

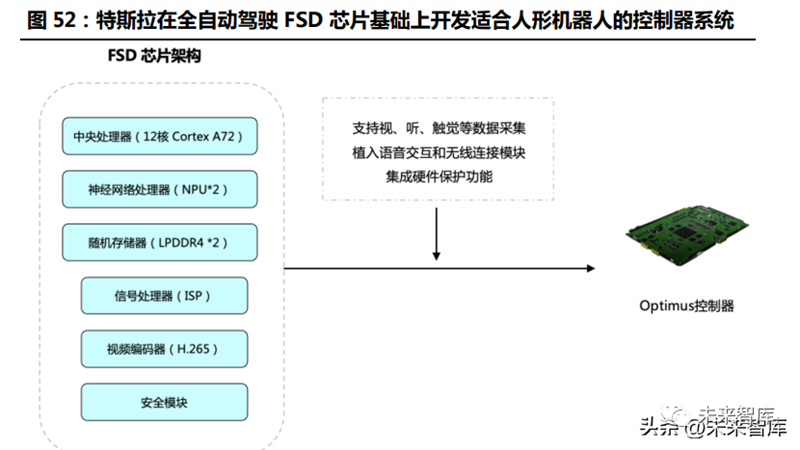

Tesla Optimus: Tesla reuses its perception and computing capabilities to build a controller system for humanoid robots based on its Full Self-Driving (FSD) chip. The FSD chip integrates a central processing unit (CPU), neural processing unit (NPU), graphics processing unit (GPU), synchronous dynamic random-access memory (SDRAM), image signal processor (ISP), H.265 video encoder, and security module, enabling efficient image processing, environmental perception, general-purpose computing, and real-time behavior control. To reconcile the different needs of humanoid robots and cars, the Optimus controller chip adapts the FSD chip: it adds data-acquisition support for multimodal inputs such as visual, auditory, and tactile perception, integrates voice-interaction and wireless-connectivity modules for human-machine communication, and includes hardware protection to keep the robot and nearby people safe, thereby supporting behavioral decision-making and motion control.

Note: * This article (including images) is a reprint. If there is any infringement, please contact us to delete it.